- bonding status check script

[root@yj26-ovstest1 ~]# cat bonding-check-test.sh
#!/bin/bash
BONDING_CHECK_IP=$@
PING_CHECK() {
    for F in $BONDING_CHECK_IP
    do
        N=1
        while [ $N -ge 1 ]
        do
            ping $F -w 1 -c 1 -q >/dev/null
            if [ $? != 0 ] ; then
                let N+=1
                echo Not pingable $F
            else
                N=0
            fi
        done
    done
}
if [ -d /proc/net/bonding/ ] ; then
    IFCFGFILE=`ls /etc/sysconfig/network-scripts/ifcfg-*`
    for T in $IFCFGFILE
    do
        source $T
        if [ "${SLAVE}" = "yes" -a -n "${MASTER}" ]; then
            echo "-${DEVICE}" > /sys/class/net/${MASTER}/bonding/slaves 2>/dev/null
            echo "bonding master ${MASTER}'s slave $DEVICE out"
            PING_CHECK
            sleep 1
            echo "+${DEVICE}" > /sys/class/net/${MASTER}/bonding/slaves 2>/dev/null
            echo "bonding master ${MASTER}'s slave $DEVICE in"
            unset SLAVE DEVICE MASTER
        fi
    done
fi
which ovs-vsctl >/dev/null
if [ $? == 0 ] ; then
    BONDMASTERS=`ovs-appctl bond/list | tail -n +2 | awk '{print $1}'`
    for i in $BONDMASTERS
    do
        echo $i
        for I in `ovs-appctl bond/show $i | grep ^slave | awk '{print $2}'`
        do
            BOND_INTERFACE=${I/:}
            ip link set down $BOND_INTERFACE
            echo "ovs bonding master ${i}'s slave $BOND_INTERFACE down"
            PING_CHECK
            sleep 1
            ip link set up $BOND_INTERFACE
            echo "ovs bonding master ${i}'s slave $BOND_INTERFACE up"
        done
    done
fi

- test

[root@yj26-ovstest2 ~]# bash bonding-check-test.sh 1.1.1.1 3.3.3.3 8.8.8.8
bonding master bondtest's slave eth6 out
Not pingable 3.3.3.3
Not pingable 3.3.3.3
Not pingable 3.3.3.3
Not pingable 3.3.3.3
Not pingable 3.3.3.3
Not pingable 3.3.3.3
Not pingable 3.3.3.3
Not pingable 3.3.3.3
bonding master bondtest's slave eth6 in
bonding master bondtest's slave eth7 out
bonding master bondtest's slave eth7 in
bond-testbr
ovs bonding master bond-testbr's slave eth3 down
ovs bonding master bond-testbr's slave eth3 up
ovs bonding master bond-testbr's slave eth4 down
ovs bonding master bond-testbr's slave eth4 up
bond-testbr2
ovs bonding master bond-testbr2's slave eth1 down
ovs bonding master bond-testbr2's slave eth1 up
ovs bonding master bond-testbr2's slave eth2 down
ovs bonding master bond-testbr2's slave eth2 up
[root@yj26-ovstest2 ~]# ip -4 -o a
1: lo inet 127.0.0.1/8 scope host lo\ valid_lft forever preferred_lft forever
2: eth0 inet 10.26.1.212/24 brd 10.26.1.255 scope global eth0\ valid_lft forever preferred_lft forever
10: testbr inet 10.26.2.22/24 scope global testbr\ valid_lft forever preferred_lft forever
13: bondtest inet 3.3.3.4/24 scope global bondtest\ valid_lft forever preferred_lft forever

- The script takes the slave interfaces down one at a time and keeps pinging each target until the ping succeeds.
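The PING_CHECK loop retries without limit, so a target that never answers would hang the script. A minimal sketch of a bounded variant follows; the helper name `retry_until` and the attempt limit are assumptions made for illustration and are not part of bonding-check-test.sh.

```shell
#!/bin/bash
# retry_until MAX CMD [ARGS...]
# Runs CMD up to MAX times; returns 0 on the first success and 1 if
# every attempt fails. (Hypothetical helper, not from the original script.)
retry_until() {
    local max=$1
    shift
    local n=1
    while [ "$n" -le "$max" ]; do
        if "$@"; then
            return 0
        fi
        n=$((n + 1))
    done
    return 1
}

# Example: give each target at most 10 one-second pings.
# for ip in "$@"; do
#     retry_until 10 ping "$ip" -w 1 -c 1 -q >/dev/null || echo "Not pingable $ip"
# done
```

Because `ping -w 1` already waits up to one second, no extra sleep is added between attempts.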

Installing GRUB on CentOS 6

The GRUB version installed by default on CentOS 6 is 0.97.

With CentOS 7 the GRUB version moves up to 2, which is far easier to install. There is even a tool (grub2-mkconfig) that generates the GRUB configuration file automatically.

On CentOS 6, installing and configuring GRUB properly takes a long and winding road.

Everything in this post was tested in a P2V (physical to virtual) scenario.

First, create an image file to hold the root filesystem.

[root@kvm2 scpark]# truncate -s 15G roro-`date +%Y%m%d`-3.raw
[root@kvm2 scpark]# ls -atl --block-size G roro-`date +%Y%m%d`-3.raw
-rw-r--r--. 1 root root 15G Aug 18 00:53 roro-20180818-3.raw
[root@kvm2 scpark]# du -sh roro-`date +%Y%m%d`-3.raw
0 roro-20180818-3.raw
[root@kvm2 scpark]#

- truncate created an empty 15 GB sparse file.
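The difference between what `ls` and `du` report can be reproduced on a small scale. The sketch below assumes GNU `truncate` and `stat`; the file name and the 16M size are made up for the demonstration.

```shell
#!/bin/bash
# Create a small sparse file in a temporary directory and compare its
# apparent size with the space actually allocated on disk.
tmpdir=$(mktemp -d)
img="$tmpdir/sparse-demo.raw"          # hypothetical file name

truncate -s 16M "$img"

apparent_bytes=$(stat -c %s "$img")    # size that ls -l reports
allocated_blocks=$(stat -c %b "$img")  # blocks actually allocated (what du counts)

echo "apparent size : $apparent_bytes bytes"
echo "allocated     : $allocated_blocks blocks"

rm -rf "$tmpdir"
```

The apparent size is the full 16 MiB, while the allocated block count stays at or near zero until data is written into the file.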

Next, create the filesystems that will receive the root filesystem.

[root@kvm2 scpark]# losetup -P -f roro-20180818-3.raw

[root@kvm2 scpark]# lsblk

...

loop2 7:2 0 15G 0 loop

[root@kvm2 scpark]# losetup -a

...

/dev/loop2: [2097]:59871267 (/data3/scpark/roro-20180818-3.raw)

[root@kvm2 grub]# fdisk -l /dev/loop2

Disk /dev/loop2: 16.1 GB, 16106127360 bytes

255 heads, 63 sectors/track, 1958 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Device Boot Start End Blocks Id System

/dev/loop2p1 * 1 131 1047552 83 Linux

/dev/loop2p2 131 1958 14679040+ 83 Linux

[root@kvm2 scpark]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

...

loop2 7:2 0 15G 0 loop

├─loop2p1 259:0 0 1023M 0 loop

└─loop2p2 259:2 0 14G 0 loop

[root@kvm2 scpark]#

- losetup makes the file appear as a loopback device. The -P option (partscan) makes sure that device names show up properly for partitions created on the loopback device.

- The lower part of the output shows the partitions created with fdisk. GRUB 0.97 supports only the MBR partition table.

Now format the partitions.

[root@kvm2 scpark]# mkfs.ext2 -I 128 /dev/loop2p1

mke2fs 1.42.9 (28-Dec-2013)

Discarding device blocks: done

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=0 blocks, Stripe width=0 blocks

65536 inodes, 261888 blocks

13094 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=268435456

8 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376

Allocating group tables: done

Writing inode tables: done

Writing superblocks and filesystem accounting information: done

[root@kvm2 scpark]# dumpe2fs /dev/loop2p1|grep -i 'inode size'

dumpe2fs 1.42.9 (28-Dec-2013)

Inode size: 128

[root@kvm2 scpark]# mkfs.xfs /dev/loop2p2

meta-data=/dev/loop2p2 isize=512 agcount=4, agsize=917440 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=3669760, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@kvm2 scpark]#

- With an old GRUB version, a filesystem whose inode size is larger than 128 can cause problems, so the -I option was used to set the inode size to 128.
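The inode-size check shown with dumpe2fs can be wrapped in a small helper so it is easy to repeat before installing GRUB. This is only a sketch: the function names `inode_size` and `check_boot_fs` are hypothetical, and the parser assumes the `Inode size:` line format seen in the output above.

```shell
#!/bin/bash
# inode_size: read dumpe2fs output on stdin and print the inode size.
inode_size() {
    awk -F: '/^Inode size:/ { gsub(/[ \t]/, "", $2); print $2 }'
}

# check_boot_fs DEVICE: succeed only if the filesystem uses 128-byte inodes.
# dumpe2fs -h prints the superblock information only.
check_boot_fs() {
    local size
    size=$(dumpe2fs -h "$1" 2>/dev/null | inode_size)
    [ "$size" = "128" ]
}

# Usage (as root, on the host from this post):
#   check_boot_fs /dev/loop2p1 && echo "inode size OK for GRUB 0.97"
```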

Mount the partitions and copy over the root filesystem files from the existing physical server.

[root@kvm2 scpark]# mkdir /mnt/loop2
[root@kvm2 scpark]# mount /dev/loop2p2 /mnt/loop2
[root@kvm2 scpark]# mkdir /mnt/loop2/boot
[root@kvm2 scpark]# mount /dev/loop2p1 /mnt/loop2/boot
[root@kvm2 scpark]# ls -atl /mnt/loop2/
total 4
drwxr-xr-x. 3 root root 18 Aug 18 01:30 .
drwxr-xr-x. 7 root root 70 Aug 18 01:29 ..
drwxr-xr-x. 3 root root 4096 Aug 18 01:25 boot
[root@kvm2 scpark]# df -h
...
/dev/loop2p2 14G 33M 14G 1% /mnt/loop2
/dev/loop2p1 1015M 1.3M 963M 1% /mnt/loop2/boot
[root@kvm2 scpark]#
[root@kvm2 loop2]# rsync -avg --exclude=/proc --exclude=/dev --exclude=/sys --exclude=/boot 192.168.4.13:/ /mnt/loop2/
...
[root@kvm2 loop2]# rsync -avg 192.168.4.13:/boot/ /mnt/loop2/boot/
...

- While this runs, no process that writes to disk, such as a database, should be running on the physical server. If writes happen during the rsync, the data being written can be copied in a corrupted state, and an fsck may be needed afterwards.

Now the GRUB image gets installed, and this is where it gets important.

[root@kvm2 loop2]# for i in `echo proc dev sys` ;do mkdir /mnt/loop2/$i ; mount -B /$i /mnt/loop2/$i ; done
[root@kvm2 loop2]# chroot /mnt/loop2/
[root@kvm2 /]# ls
bash: ls: command not found
[root@kvm2 /]# PATH=/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/root/bin
[root@kvm2 /]# ls
bin boot dev etc home lib lib64 lost+found media mnt opt proc root sbin selinux srv sys tmp usr var
[root@kvm2 /]# pwd
/

- First, bind mount the directories that the chroot needs.

- If commands cannot be found because the PATH environment variable is off, fix PATH as well.
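The bind mounts have to be undone, in reverse order, once the work inside the chroot is finished. The sketch below only prints the commands so they can be reviewed first; the function names are hypothetical, and /mnt/loop2 is the mount point used in this post.

```shell
#!/bin/bash
# Directories that are bind mounted into the chroot.
CHROOT_DIRS="proc dev sys"

# print_mounts ROOT: print the commands that prepare ROOT for chroot.
print_mounts() {
    local root=${1%/}
    local d
    for d in $CHROOT_DIRS; do
        echo "mkdir -p $root/$d"
        echo "mount --bind /$d $root/$d"
    done
}

# print_umounts ROOT: print the matching umount commands, last mount first.
print_umounts() {
    local root=${1%/}
    local d
    local reversed=""
    for d in $CHROOT_DIRS; do
        reversed="$d $reversed"
    done
    for d in $reversed; do
        echo "umount $root/$d"
    done
}

print_mounts /mnt/loop2
print_umounts /mnt/loop2
```

Piping either list to `sh` as root would execute it; printing first keeps the sketch safe to try anywhere.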

Running df inside the chroot still shows the information from the physical server, so edit /etc/mtab to match the new layout.

[root@kvm2 /]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/centos66-LogVol00

14G 4.5G 9.6G 32% /

devpts 63G 0 63G 0% /dev/pts

tmpfs 63G 0 63G 0% /dev/shm

/dev/sda8 1015M 27M 938M 3% /boot

[root@kvm2 /]# diff -u /etc/mtab_back /etc/mtab

--- /etc/mtab_back 2018-08-18 01:54:01.688939647 +0900

+++ /etc/mtab 2018-08-18 01:52:29.631475978 +0900

@@ -1,7 +1,9 @@

-/dev/mapper/centos66-LogVol00 / ext4 rw 0 0

-/dev/sda8 /boot ext4 rw 0 0

+/dev/loop2p2 / xfs rw 0 0

+/dev/loop2p1 /boot ext2 rw 0 0

proc /proc proc rw 0 0

sysfs /sys sysfs rw 0 0

devpts /dev/pts devpts rw,gid=5,mode=620 0 0

tmpfs /dev/shm tmpfs rw,rootcontext="system_u:object_r:tmpfs_t:s0" 0 0

none /proc/sys/fs/binfmt_misc binfmt_misc rw 0 0

[root@kvm2 /]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/loop2p2 14G 4.5G 9.6G 32% /

/dev/loop2p1 1015M 27M 938M 3% /boot

devpts 63G 0 63G 0% /dev/pts

tmpfs 63G 0 63G 0% /dev/shm

[root@kvm2 /]#

Running grub-install in this state can fail with the error below.

[root@kvm2 /]# grub-install --version
grub-install (GNU GRUB 0.97)
[root@kvm2 /]# grub-install /dev/loop2
/dev/loop2 does not have any corresponding BIOS drive.

- In that case, edit device.map and create stand-in block device files with mknod.

[root@kvm2 grub]# !diff
diff -u /boot/grub/device.map_backup /boot/grub/device.map
--- /boot/grub/device.map_backup 2018-08-18 02:26:02.000000000 +0900
+++ /boot/grub/device.map 2018-08-18 02:30:43.000000000 +0900
@@ -1 +1 @@
-(hd0) /dev/sda
+(hd0) /dev/vda
[root@kvm2 grub]#
[root@kvm2 grub]# ls -alt /dev/loop2*
brw-rw----. 1 root disk 259, 8 Aug 18 02:20 /dev/loop2p2
brw-rw----. 1 root disk 259, 7 Aug 18 02:20 /dev/loop2p1
brw-rw----. 1 root disk 7, 3 Aug 18 02:19 /dev/loop2
[root@kvm2 grub]# mknod /dev/vda b 7 3
[root@kvm2 grub]# mknod /dev/vda1 b 259 7
[root@kvm2 grub]# mknod /dev/vda2 b 259 8
[root@kvm2 grub]# ls -atl /dev/vda* /dev/loop2*
brw-r--r--. 1 root root 259, 8 Aug 18 02:33 /dev/vda2
brw-r--r--. 1 root root 259, 7 Aug 18 02:33 /dev/vda1
brw-r--r--. 1 root root 7, 3 Aug 18 02:32 /dev/vda
brw-rw----. 1 root disk 259, 8 Aug 18 02:20 /dev/loop2p2
brw-rw----. 1 root disk 259, 7 Aug 18 02:20 /dev/loop2p1
brw-rw----. 1 root disk 7, 3 Aug 18 02:19 /dev/loop2
[root@kvm2 grub]#

- device.map is the device mapping that the grub-install script consults when it runs. hd0 means the first device; the disk will be the first boot disk in the virtual machine being migrated to, so it is mapped as hd0. sda was changed to vda in device.map because a device name that is not currently in use was needed. Since loop2 ends in a digit, its partitions get a "p1"-style suffix, as in "loop2p1", and that makes the grub-install script fail again. That is why vda is used. Any unused device name, such as sdy, works just as well.

- mknod creates block device files whose major and minor numbers match those of the device GRUB will be installed on.
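Instead of reading the major and minor numbers off `ls -l` by hand, they can be taken from `stat`. The sketch below assumes GNU stat, which reports both numbers in hexadecimal through `%t` and `%T`; the function names are hypothetical, and the mknod command is only printed, not executed.

```shell
#!/bin/bash
# dev_numbers DEVICE: print "MAJOR MINOR" in decimal for a device node.
dev_numbers() {
    local nums
    # %t and %T are hexadecimal, so prefix them with 0x for printf.
    nums=$(stat -c '0x%t 0x%T' "$1") || return 1
    # Left unquoted on purpose: the two values must split into two arguments.
    printf '%d %d\n' $nums
}

# mknod_line ALIAS DEVICE: print the mknod command that would create the
# block device ALIAS with the same numbers as DEVICE.
mknod_line() {
    echo "mknod $1 b $(dev_numbers "$2")"
}

# Usage on the host from this post:
#   mknod_line /dev/vda  /dev/loop2
#   mknod_line /dev/vda1 /dev/loop2p1
#   mknod_line /dev/vda2 /dev/loop2p2
```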

Next, edit the grub.conf file.

[root@kvm2 grub]# diff -u grub.conf_backup grub.conf

--- grub.conf_backup 2018-08-18 02:51:35.000000000 +0900

+++ grub.conf 2018-08-18 02:53:22.000000000 +0900

@@ -13,5 +13,5 @@

hiddenmenu

title CentOS 6 (2.6.32-504.el6.x86_64)

root (hd0,0)

- kernel /vmlinuz-2.6.32-504.el6.x86_64 ro root=/dev/mapper/centos66-LogVol00 rd_LVM_LV=rhel73/swap rd_NO_LUKS KEYBOARDTYPE=pc KEYTABLE=us LANG=en_US.UTF-8 rd_NO_MD SYSFONT=latarcyrheb-sun16 rd_LVM_LV=cent74/swap crashkernel=auto rd_LVM_LV=centos66/LogVol00 rd_NO_DM rhgb quiet

+ kernel /vmlinuz-2.6.32-504.el6.x86_64 ro root=UUID=1fbfaba7-3479-4822-822e-014bc2a61fb1 KEYBOARDTYPE=pc KEYTABLE=us LANG=en_US.UTF-8 crashkernel=auto noquiet

initrd /initramfs-2.6.32-504.el6.x86_64.img

[root@kvm2 grub]#

- Here, root (hd0,0) does not refer to the root filesystem; it names the partition that holds the boot files. If the boot partition were the second partition, it would have to be (hd0,1).

- The locations of the kernel and initrd files matter too: they must be given exactly, relative to the partition selected by root above. If there is no separate boot partition and the files live in the boot directory of the root filesystem, the entries would have to read kernel /boot/vmlinuz... and initrd /boot/initramfs... instead.

- The root filesystem must be given by device name or by UUID. UUIDs work more generally, so use the UUID. Remove all the trailing options related to swap and LVM.

- rhgb stands for Red Hat graphical boot; it shows a progress bar beneath the CentOS logo during boot. quiet suppresses boot messages, and noquiet does the opposite.
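The UUID for the root= parameter can be read with blkid rather than copied by hand. The sketch below builds only the core of the kernel line; the helper name `kernel_line` is hypothetical, and the path assumes a separate boot partition as in this post.

```shell
#!/bin/bash
# kernel_line KERNEL_VERSION ROOT_UUID: print a grub.conf kernel line that
# selects the root filesystem by UUID. Other boot options are left out.
kernel_line() {
    printf 'kernel /vmlinuz-%s ro root=UUID=%s\n' "$1" "$2"
}

# On the real host the UUID would come from blkid, for example:
#   uuid=$(blkid -s UUID -o value /dev/loop2p2)
# The values below are the ones that appear in the diff above.
kernel_line 2.6.32-504.el6.x86_64 1fbfaba7-3479-4822-822e-014bc2a61fb1
```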

Now install GRUB.

[root@kvm2 /]# grub-install /dev/vda
Installing for i386-pc platform.
Installation finished. No error reported.

- It installed without errors.

If everything went well up to here, the system should get past GRUB without problems.

What remains is to fix up the initramfs, /etc/fstab, SELinux and similar issues, after which the system should boot properly.

References

Using -- in bash

Do you know what -- is used for in bash?

Whenever I saw it I just shrugged and moved on, but today I think I finally understand it a little.

The bash manual says:

-- marks the end of options. Anything that comes after it is treated only as a positional parameter (argument).

So what does that mean?

1. Trying to create a directory named -test fails, because -t is parsed as an option.

[root@kvm2 test]# mkdir -test

mkdir: invalid option -- 't'

Try 'mkdir --help' for more information.

2. Put -- in between to mark the end of options and try again:

[root@kvm2 test]# mkdir -- -test

[root@kvm2 test]# ls -alt

total 0

drwxr-xr-x. 2 root root 6 Aug 7 14:12 -test

drwxr-xr-x. 3 root root 19 Aug 7 14:12 .

drwxr-xr-x. 6 root root 51 Aug 7 14:12 ..

3. The same applies when checking with ls.

[root@kvm2 test]# ls -atl -test

ls: invalid option -- 'e'

Try 'ls --help' for more information.

[root@kvm2 test]# ls -atl -- -test

total 0

drwxr-xr-x. 2 root root 6 Aug 7 14:12 .

drwxr-xr-x. 3 root root 19 Aug 7 14:12 ..

[root@kvm2 test]#

That is how it can be used. 🙂
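The same behaviour can be tried safely in a throwaway directory. The sketch below also shows the other common workaround, prefixing the name with ./ so it no longer starts with a dash; the directory and file names are made up for the demonstration.

```shell
#!/bin/bash
# Work in a temporary directory so nothing outside it is touched.
orig_dir=$PWD
workdir=$(mktemp -d)
cd "$workdir" || exit 1

mkdir -- -test            # "--" ends option parsing, so -test is a name
touch -- -test/file       # the same convention works for other commands
listing=$(ls -- -test)    # lists the contents of the directory named -test
echo "$listing"

# A ./ prefix avoids the problem too, because the argument no longer
# starts with a dash.
rm -r ./-test

cd "$orig_dir" || exit 1
rmdir "$workdir"
```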

redhat openstack 13 queens install manual

Preparation

- hypervisor centos7

- nested kvm setup

- vm instance

[root@kvm2 /]# LANG=C virsh list |grep -i yj23
 484   yj23-osp13-con1      running
 485   yj23-osp13-com2      running
 486   yj23-osp13-con2      running
 487   yj23-osp13-con3      running
 488   yj23-osp13-deploy    running
 489   yj23-osp13-ceph1     running
 490   yj23-osp13-ceph2     running
 491   yj23-osp13-swift1    running
 492   yj23-osp13-ceph3     running
 493   yj23-osp13-com1      running
[root@kvm2 /]#

- Specs

[deploy]
yj23-osp13-deploy cpu=4 mem=16000 network_ipaddr="10.23.1.3"
[openstack]
yj23-osp13-con1 cpu=4 mem=12288 network_ipaddr="10.23.1.11"
yj23-osp13-con2 cpu=4 mem=12288 network_ipaddr="10.23.1.12"
yj23-osp13-con3 cpu=4 mem=12288 network_ipaddr="10.23.1.13"
yj23-osp13-com1 cpu=4 mem=6000 network_ipaddr="10.23.1.21"
yj23-osp13-com2 cpu=4 mem=6000 network_ipaddr="10.23.1.22"
[ceph]
yj23-osp13-ceph1 cpu=4 mem=4096 network_ipaddr="10.23.1.31"
yj23-osp13-ceph2 cpu=4 mem=4096 network_ipaddr="10.23.1.32"
yj23-osp13-ceph3 cpu=4 mem=4096 network_ipaddr="10.23.1.33"
yj23-osp13-swift1 cpu=4 mem=4096 network_ipaddr="10.23.1.34"

- VM interfaces (identical for all VMs)

[root@kvm2 /]# virsh domiflist yj23-osp13-con1
Interface  Type       Source     Model       MAC
-------------------------------------------------------
vnet0      network    yj23       virtio      52:54:00:fc:f1:89
vnet1      network    yj23       virtio      52:54:00:d5:76:ba
vnet2      network    yj23       virtio      52:54:00:b3:36:61
vnet3      network    yj23       virtio      52:54:00:fe:35:58
vnet4      network    yj23       virtio      52:54:00:b3:2d:1c
vnet5      bridge     br0        virtio      52:54:00:52:82:f6

- Network environment

[root@kvm2 /]# virsh net-info yj23
Name:           yj23
UUID:           28ebbc19-72d3-5953-b43b-507e6f0553dc
Active:         yes
Persistent:     yes
Autostart:      yes
Bridge:         virbr37
[root@kvm2 /]# ip a sh virbr37
2068: virbr37: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 52:54:f2:5f:4e:71 brd ff:ff:ff:ff:ff:ff
    inet 10.23.1.1/24 brd 10.23.1.255 scope global virbr37
       valid_lft forever preferred_lft forever
[root@kvm2 /]#
[root@kvm2 /]# ip a sh br0
6: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 68:b5:99:70:5a:0e brd ff:ff:ff:ff:ff:ff
    inet 192.168.92.1/16 brd 192.168.255.255 scope global br0
       valid_lft forever preferred_lft forever
...
[root@kvm2 /]#

- Block the DHCP protocol on br0

[root@kvm2 /]# arp -n |grep -i 192.168.0.1
192.168.0.111 ether a0:af:bd:e6:d7:19 C br0
192.168.0.1 ether 90:9f:33:65:47:02 C br0
192.168.0.106 ether 94:57:a5:5c:34:5c C br0
[root@kvm2 /]# iptables -t filter -I FORWARD -p udp -m multiport --ports 67,68 -i br0 -o br0 -m mac --mac-source 90:9f:33:65:47:02 -j REJECT

Nodes obtain their IP addresses over DHCP during the OSP deployment, so there must not be a separate DHCP server (192.168.0.1) answering on this network.
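The grep for 192.168.0.1 also matches 192.168.0.111 and 192.168.0.106, so the right MAC address has to be picked out by eye. A minimal sketch of an exact-match lookup follows; the function name `mac_for_ip` is hypothetical, and the sample lines are the ones from the arp output in this post.

```shell
#!/bin/bash
# mac_for_ip IP: read arp -n style lines on stdin and print the MAC address
# of the entry whose first column is exactly IP.
mac_for_ip() {
    awk -v ip="$1" '$1 == ip { print $3 }'
}

# Sample lines taken from the arp output in this post.
sample='192.168.0.111 ether a0:af:bd:e6:d7:19 C br0
192.168.0.1 ether 90:9f:33:65:47:02 C br0
192.168.0.106 ether 94:57:a5:5c:34:5c C br0'

dhcp_mac=$(printf '%s\n' "$sample" | mac_for_ip 192.168.0.1)
echo "$dhcp_mac"

# On the hypervisor the rule could then be written as:
#   iptables -t filter -I FORWARD -p udp -m multiport --ports 67,68 \
#       -i br0 -o br0 -m mac --mac-source "$dhcp_mac" -j REJECT
```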

- Repository setup

[root@yj23-osp13-deploy yum.repos.d]# cat osp13.repo
[osp13]
name=osp13
baseurl=http://192.168.0.106/
gpgcheck=0
enabled=1
[root@yj23-osp13-deploy yum.repos.d]#
[root@yj23-osp13-deploy yum.repos.d]# curl 192.168.0.106
<!DOCTYPE html PUBLIC "-//W3C//DTD HTML 3.2 Final//EN"><html>
<title>Directory listing for /</title>
<body>
<h2>Directory listing for /</h2>
<hr>
<ul>
<li><a href="nohup.out">nohup.out</a>
<li><a href="repo-start.sh">repo-start.sh</a>
<li><a href="repodata/">repodata/</a>
<li><a href="rhel-7-server-extras-rpms/">rhel-7-server-extras-rpms/</a>
<li><a href="rhel-7-server-nfv-rpms/">rhel-7-server-nfv-rpms/</a>
<li><a href="rhel-7-server-openstack-13-rpms/">rhel-7-server-openstack-13-rpms/</a>
<li><a href="rhel-7-server-rh-common-rpms/">rhel-7-server-rh-common-rpms/</a>
<li><a href="rhel-7-server-rhceph-3-mon-rpms/">rhel-7-server-rhceph-3-mon-rpms/</a>
<li><a href="rhel-7-server-rhceph-3-osd-rpms/">rhel-7-server-rhceph-3-osd-rpms/</a>
<li><a href="rhel-7-server-rhceph-3-tools-rpms/">rhel-7-server-rhceph-3-tools-rpms/</a>
<li><a href="rhel-7-server-rpms/">rhel-7-server-rpms/</a>
<li><a href="rhel-ha-for-rhel-7-server-rpms/">rhel-ha-for-rhel-7-server-rpms/</a>
</ul>
<hr>
</body>
</html>
[root@yj23-osp13-deploy yum.repos.d]#
[root@yj23-osp13-deploy yum.repos.d]# yum repolist
Loaded plugins: search-disabled-repos
repo id          repo name          status
osp13            osp13              23,866
repolist: 23,866
[root@yj23-osp13-deploy yum.repos.d]#

If the repository on 192.168.0.106 is not running, start it:

[root@cloud-test02 ~]# cd /storage/repository/
[root@cloud-test02 repository]# ls
nohup.out  repodata  rhel-7-server-nfv-rpms  rhel-7-server-rh-common-rpms  rhel-7-server-rhceph-3-osd-rpms  rhel-7-server-rpms
repo-start.sh  rhel-7-server-extras-rpms  rhel-7-server-openstack-13-rpms  rhel-7-server-rhceph-3-mon-rpms  rhel-7-server-rhceph-3-tools-rpms  rhel-ha-for-rhel-7-server-rpms
[root@cloud-test02 repository]#
[root@cloud-test02 repository]# nohup bash repo-start.sh
[root@cloud-test02 repository]# netstat -ntpl |grep -i 80
tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 13063/python
tcp 0 0 192.168.39.1:53 0.0.0.0:* LISTEN 2180/dnsmasq
tcp 0 0 10.10.10.1:53 0.0.0.0:* LISTEN 1980/dnsmasq
[root@cloud-test02 repository]#

undercloud install

- Create the stack user

[root@yj23-osp13-deploy ~]# useradd stack
[root@yj23-osp13-deploy ~]# passwd stack
Changing password for user stack.
New password:
BAD PASSWORD: The password is shorter than 8 characters
Retype new password:
passwd: all authentication tokens updated successfully.
[root@yj23-osp13-deploy ~]#
[root@yj23-osp13-deploy ~]# echo "stack ALL=(root) NOPASSWD:ALL" | tee -a /etc/sudoers.d/stack
stack ALL=(root) NOPASSWD:ALL
[root@yj23-osp13-deploy ~]# chmod 0440 /etc/sudoers.d/stack
[root@yj23-osp13-deploy ~]# su - stack
[stack@yj23-osp13-deploy ~]$

- hostname setup

[root@yj23-osp13-deploy ~]# hostnamectl
   Static hostname: yj23-osp13-deploy.test.dom
         Icon name: computer-vm
           Chassis: vm
        Machine ID: ef07e670f4544423b9518b9501aefa68
           Boot ID: 485c85b2dd31415e9ea59d71adb70b34
    Virtualization: kvm
  Operating System: Red Hat Enterprise Linux Server 7.3 (Maipo)
       CPE OS Name: cpe:/o:redhat:enterprise_linux:7.3:GA:server
            Kernel: Linux 3.10.0-514.21.2.el7.x86_64
      Architecture: x86-64
[root@yj23-osp13-deploy ~]#
[root@yj23-osp13-deploy ~]# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
10.23.1.3 yj23-osp13-deploy yj23-osp13-deploy.test.dom
[root@yj23-osp13-deploy ~]#

- yum update

[root@yj23-osp13-deploy ~]# yum update && reboot

- director package install

[root@yj23-osp13-deploy ~]# sudo yum install -y python-tripleoclient
[root@yj23-osp13-deploy ~]# sudo yum install -y ceph-ansible

- undercloud.conf

[stack@yj23-osp13-deploy ~]$ grep -iv '^$\|^#' undercloud.conf
[DEFAULT]
undercloud_hostname = yj23-osp13-deploy.test.dom
local_ip = 192.1.1.1/24
undercloud_public_host = 192.1.1.2
undercloud_admin_host = 192.1.1.3
undercloud_nameservers = 10.23.1.1
undercloud_ntp_servers = ['0.kr.pool.ntp.org','1.asia.pool.ntp.org','0.asia.pool.ntp.org']
subnets = ctlplane-subnet
local_subnet = ctlplane-subnet
local_interface = eth1
local_mtu = 1500
inspection_interface = br-ctlplane
enable_ui = true
[auth]
undercloud_db_password = dbpassword
undercloud_admin_password = adminpassword
[ctlplane-subnet]
cidr = 192.1.1.0/24
dhcp_start = 192.1.1.5
dhcp_end = 192.1.1.24
inspection_iprange = 192.1.1.100,192.1.1.120
gateway = 192.1.1.1
masquerade = true
[stack@yj23-osp13-deploy ~]$
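As far as I know, the director documentation asks that inspection_iprange not overlap the dhcp_start to dhcp_end range. The sketch below is one way to check that before running the install; the function names are hypothetical, and the addresses are the ones from the undercloud.conf shown in this post.

```shell
#!/bin/bash
# ip_to_int A.B.C.D: print the IPv4 address as a single integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<EOF
$1
EOF
    echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

# ranges_overlap START1 END1 START2 END2: succeed if the two inclusive
# address ranges share at least one address.
ranges_overlap() {
    local s1 e1 s2 e2
    s1=$(ip_to_int "$1"); e1=$(ip_to_int "$2")
    s2=$(ip_to_int "$3"); e2=$(ip_to_int "$4")
    [ "$s1" -le "$e2" ] && [ "$s2" -le "$e1" ]
}

# dhcp_start/dhcp_end versus inspection_iprange from undercloud.conf.
if ranges_overlap 192.1.1.5 192.1.1.24 192.1.1.100 192.1.1.120; then
    echo "dhcp range and inspection_iprange overlap"
else
    echo "ranges are disjoint"
fi
```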

- install

[stack@yj23-osp13-deploy ~]$ openstack undercloud install
...
2018-07-24 17:08:59,187 INFO: Configuring an hourly cron trigger for tripleo-ui logging
2018-07-24 17:09:01,885 INFO:
#############################################################################
Undercloud install complete.

The file containing this installation's passwords is at
/home/stack/undercloud-passwords.conf.

There is also a stackrc file at /home/stack/stackrc.

These files are needed to interact with the OpenStack services, and should be
secured.

#############################################################################

Verification

[stack@yj23-osp13-deploy ~]$ . stackrc
(undercloud) [stack@yj23-osp13-deploy ~]$ openstack service list
+----------------------------------+------------------+-------------------------+
| ID                               | Name             | Type                    |
+----------------------------------+------------------+-------------------------+
| 1ffe438127cd403782b235843520d65a | neutron          | network                 |
| 40cae732cf7147f2b1245daae08f2b48 | glance           | image                   |
| 4d1acdb9e542432ea7d367f1a30b1eb9 | swift            | object-store            |
| 5ad44f0026cb467e90bbe54546775292 | zaqar-websocket  | messaging-websocket     |
| 5c1ba112b273452e8ef571a2cd7ae482 | ironic           | baremetal               |
| 6155c7e482db4c4c864ad89f658e76a1 | keystone         | identity                |
| 71cfe67e036240c1a8c19bd6455dd7b5 | heat-cfn         | cloudformation          |
| 7fa6f139dd8446568d5fcb33932b8d04 | ironic-inspector | baremetal-introspection |
| b5a2f5ee4fe7419d859afa78de9bccc3 | nova             | compute                 |
| da76503730cf409b9e71f4eb4008b069 | heat             | orchestration           |
| e50567345da74e6caf241c5285f75425 | placement        | placement               |
| ec06185159224ca4a048956b50b0fdfe | mistral          | workflowv2              |
| f87d1e0aceef4d42b7c2fca150be98e8 | zaqar            | messaging               |
+----------------------------------+------------------+-------------------------+
(undercloud) [stack@yj23-osp13-deploy ~]$

- Register the overcloud images

(undercloud) [stack@director ~]$ sudo yum install rhosp-director-images rhosp-director-images-ipa
(undercloud) [stack@director ~]$ cd ~/images
(undercloud) [stack@director images]$ for i in /usr/share/rhosp-director-images/overcloud-full-latest-13.0.tar /usr/share/rhosp-director-images/ironic-python-agent-latest-13.0.tar; do tar -xvf $i; done
(undercloud) [stack@director images]$ openstack overcloud image upload --image-path /home/stack/images/

Check the images.

(undercloud) [stack@yj23-osp13-deploy ~]$ openstack image list
+--------------------------------------+------------------------+--------+
| ID                                   | Name                   | Status |
+--------------------------------------+------------------------+--------+
| 78266ebd-d137-4005-88b7-e685ac4b6a47 | bm-deploy-kernel       | active |
| 32edeabd-5dcb-4850-b6ca-b3658b2c9c51 | bm-deploy-ramdisk      | active |
| 02588a46-8938-4af4-b993-4513de86a9d1 | overcloud-full         | active |
| 8573b92e-8e88-4a83-a6d5-c81e9e3c0c84 | overcloud-full-initrd  | active |
| 765bb198-a81f-4f0a-97b5-78b765281ad1 | overcloud-full-vmlinuz | active |
+--------------------------------------+------------------------+--------+
(undercloud) [stack@yj23-osp13-deploy ~]$
(undercloud) [stack@yj23-osp13-deploy ~]$ ls -atl /httpboot/
total 421700
-rw-r--r--. 1 root root 425422545 Jul 25 09:08 agent.ramdisk
drwxr-xr-x. 2 ironic ironic 86 Jul 25 09:08 .
-rwxr-xr-x. 1 root root 6385968 Jul 25 09:08 agent.kernel
-rw-r--r--. 1 ironic ironic 758 Jul 24 16:59 boot.ipxe
-rw-r--r--. 1 ironic-inspector ironic-inspector 461 Jul 24 16:54 inspector.ipxe
dr-xr-xr-x. 19 root root 256 Jul 24 16:54 ..
(undercloud) [stack@yj23-osp13-deploy ~]$

- control plane dns setup

(undercloud) [stack@yj23-osp13-deploy ~]$ openstack subnet set --dns-nameserver 10.23.1.1 ctlplane-subnet
(undercloud) [stack@yj23-osp13-deploy ~]$ openstack subnet show ctlplane-subnet
+-------------------+-------------------------------------------------------+
| Field             | Value                                                 |
+-------------------+-------------------------------------------------------+
| allocation_pools  | 192.1.1.5-192.1.1.24                                  |
| cidr              | 192.1.1.0/24                                          |
| created_at        | 2018-07-24T08:06:34Z                                  |
| description       |                                                       |
| dns_nameservers   | 10.23.1.1                                             |
| enable_dhcp       | True                                                  |
| gateway_ip        | 192.1.1.1                                             |
| host_routes       | destination='169.254.169.254/32', gateway='192.1.1.1' |
| id                | 21ebf884-52f3-41fe-a1a7-6222b26b9ea5                  |
| ip_version        | 4                                                     |
| ipv6_address_mode | None                                                  |
| ipv6_ra_mode      | None                                                  |
| name              | ctlplane-subnet                                       |
| network_id        | 20626a78-3602-43e1-88cb-f19bbfa6e0a1                  |
| project_id        | b06059dbd4554a6dbfe6381ab8c7b8ab                      |
| revision_number   | 1                                                     |
| segment_id        | None                                                  |
| service_types     |                                                       |
| subnetpool_id     | None                                                  |
| tags              |                                                       |
| updated_at        | 2018-07-25T00:13:22Z                                  |
+-------------------+-------------------------------------------------------+

10.23.1.1 is the hypervisor's internal DNS. It is connected to the outside, so it can query the top-level DNS servers (names such as google or naver resolve).

- docker image upload

openstack overcloud container image prepare \
  --namespace=registry.access.redhat.com/rhosp13 \
  --tag-from-label {version}-{release} \
  --push-destination 192.1.1.1:8787 \
  --output-images-file /home/stack/templates/container-images-ovn-octavia-manila-barbican.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/services/barbican.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/barbican-backend-simple-crypto.yaml \
  -e /home/stack/templates/barbican-configure.yml \
  --output-env-file=/home/stack/templates/overcloud_images_local_barbican.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/services-docker/neutron-ovn-dvr-ha.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/services-docker/octavia.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/services-docker/manila.yaml -e /home/stack/templates/node-info.yaml \
  -e /home/stack/templates/overcloud_images_local_barbican.yaml \
  -e /home/stack/templates/node-info.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \
  -e /home/stack/templates/network-environment.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/services-docker/neutron-ovn-dvr-ha.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/services/sahara.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/low-memory-usage.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/manila-cephfsnative-config-docker.yaml \
  -e /home/stack/templates/external-ceph.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/services/barbican.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/barbican-backend-simple-crypto.yaml \
  -e /home/stack/templates/barbican-configure.yml \

Add the environment files you plan to use, and the docker images they require are worked out and downloaded automatically.

- introspection

Set up vbmc on the hypervisor so that the undercloud can manage power for the overcloud nodes.

[root@kvm2 ~]# yum install -y python-virtualbmc
[root@kvm2 ~]# vbmc add yj23-osp13-con1 --port 4041 --username admin --password admin
[root@kvm2 ~]# vbmc add yj23-osp13-con2 --port 4042 --username admin --password admin
[root@kvm2 ~]# vbmc add yj23-osp13-con3 --port 4043 --username admin --password admin
[root@kvm2 ~]# vbmc add yj23-osp13-com1 --port 4044 --username admin --password admin
[root@kvm2 ~]# vbmc add yj23-osp13-com2 --port 4045 --username admin --password admin
[root@kvm2 ~]# vbmc add yj23-osp13-swift1 --port 4046 --username admin --password admin
[root@kvm2 ~]#
[root@kvm2 ~]# vbmc start yj23-osp13-con1
[root@kvm2 ~]# 2018-07-25 09:47:40,089.089 19742 INFO VirtualBMC [-] Virtual BMC for domain yj23-osp13-con1 started
vbmc start yj23-osp13-con2
[root@kvm2 ~]# 2018-07-25 09:47:41,473.473 19762 INFO VirtualBMC [-] Virtual BMC for domain yj23-osp13-con2 started
vbmc start yj23-osp13-con3
[root@kvm2 ~]# 2018-07-25 09:47:42,747.747 19780 INFO VirtualBMC [-] Virtual BMC for domain yj23-osp13-con3 started
vbmc start yj23-osp13-com1
[root@kvm2 ~]# 2018-07-25 09:47:45,677.677 19822 INFO VirtualBMC [-] Virtual BMC for domain yj23-osp13-com1 started
vbmc start yj23-osp13-com2
[root@kvm2 ~]# 2018-07-25 09:47:46,635.635 19840 INFO VirtualBMC [-] Virtual BMC for domain yj23-osp13-com2 started
vbmc start yj23-osp13-swift1
[root@kvm2 ~]# 2018-07-25 09:47:48,486.486 19872 INFO VirtualBMC [-] Virtual BMC for domain yj23-osp13-swift1 started
[root@kvm2 ~]# vbmc list
+-------------------+---------+---------+------+
| Domain name       | Status  | Address | Port |
+-------------------+---------+---------+------+
| yj23-osp13-com1   | running | ::      | 4044 |
| yj23-osp13-com2   | running | ::      | 4045 |
| yj23-osp13-con1   | running | ::      | 4041 |
| yj23-osp13-con2   | running | ::      | 4042 |
| yj23-osp13-con3   | running | ::      | 4043 |
| yj23-osp13-swift1 | running | ::      | 4046 |
[root@kvm2 ~]# netstat -nupl |grep -i udp6 0 0 :::4041 :::* 19742/python2
udp6 0 0 :::4042 :::* 19762/python2
udp6 0 0 :::4043 :::* 19780/python2
udp6 0 0 :::4044 :::* 19822/python2
udp6 0 0 :::4045 :::* 19840/python2
udp6 0 0 :::4046 :::* 19872/python2
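The repeated vbmc add and vbmc start commands differ only in the domain name and the port, so they can be generated in a loop. The sketch below only prints the commands for review and executes nothing; the function name `vbmc_commands` is hypothetical, while the domain names, the starting port and the admin/admin credentials are the ones used in this post.

```shell
#!/bin/bash
# vbmc_commands FIRST_PORT DOMAIN...: print one "vbmc add" and one
# "vbmc start" line per domain, assigning consecutive ports that start
# at FIRST_PORT.
vbmc_commands() {
    local port=$1
    shift
    local dom
    for dom in "$@"; do
        echo "vbmc add $dom --port $port --username admin --password admin"
        echo "vbmc start $dom"
        port=$((port + 1))
    done
}

vbmc_commands 4041 yj23-osp13-con1 yj23-osp13-con2 yj23-osp13-con3 \
    yj23-osp13-com1 yj23-osp13-com2 yj23-osp13-swift1
```

Each port chosen here has to match the pm_port of the same node in instackenv.json.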

overcloud node등록

(undercloud) [stack@yj21-osp13-deploy ~]$ cat instackenv.json { "nodes": [ { "pm_type": "pxe_ipmitool", "mac": [ "52:54:00:fc:f1:89" ], "pm_user": "admin", "pm_password": "admin", "pm_addr": "10.23.1.1", "pm_port": "4041", "name": "yj23-osp13-con1" }, { "pm_type": "pxe_ipmitool", "mac": [ "52:54:00:60:46:98" ], "pm_user": "admin", "pm_password": "admin", "pm_addr": "10.23.1.1", "pm_port": "4042", "name": "yj23-osp13-con2" }, { "pm_type": "pxe_ipmitool", "mac": [ "52:54:00:ea:55:06" ], "pm_user": "admin", "pm_password": "admin", "pm_addr": "10.23.1.1", "pm_port": "4043", "name": "yj23-osp13-con3" }, { "pm_type": "pxe_ipmitool", "mac": [ "52:54:00:9e:ad:2e" ], "pm_user": "admin", "pm_password": "admin", "pm_addr": "10.23.1.1", "pm_port": "4044", "name": "yj23-osp13-com1" }, { "pm_type": "pxe_ipmitool", "mac": [ "52:54:00:88:23:eb" ], "pm_user": "admin", "pm_password": "admin", "pm_addr": "10.23.1.1", "pm_port": "4045", "name": "yj23-osp13-com2" }, { "pm_type": "pxe_ipmitool", "mac": [ "52:54:00:b1:94:6c" ], "pm_user": "admin", "pm_password": "admin", "pm_addr": "10.23.1.1", "pm_port": "4046", "name": "yj23-osp13-swift1" } ] } (undercloud) [stack@yj23-osp13-deploy ~]$ openstack overcloud node import instackenv.json Started Mistral Workflow tripleo.baremetal.v1.register_or_update. Execution ID: 247b7961-f28a-4bd5-8f28-a2efee4337be Waiting for messages on queue 'tripleo' with no timeout. 6 node(s) successfully moved to the "manageable" state. 
Successfully registered node UUID bc5e2082-a2d1-4b34-b59d-31a82bcedf7b Successfully registered node UUID 5d9c871d-fb67-4787-a24a-cbfd6b96334f Successfully registered node UUID 1747b769-acc2-45d6-9563-b135fb0074e1 Successfully registered node UUID 9b02e6be-82a9-4ab8-9f51-39bbabede331 Successfully registered node UUID c469e049-798c-4033-84f9-0e6035e00e01 Successfully registered node UUID e91c9f74-4c34-4039-a69e-05c8b6d586f9 (undercloud) [stack@yj23-osp13-deploy ~]$ openstack baremetal node list +--------------------------------------+-------------------+---------------+-------------+--------------------+-------------+ | UUID | Name | Instance UUID | Power State | Provisioning State | Maintenance | +--------------------------------------+-------------------+---------------+-------------+--------------------+-------------+ | bc5e2082-a2d1-4b34-b59d-31a82bcedf7b | yj23-osp13-con1 | None | power on | manageable | False | | 5d9c871d-fb67-4787-a24a-cbfd6b96334f | yj23-osp13-con2 | None | power on | manageable | False | | 1747b769-acc2-45d6-9563-b135fb0074e1 | yj23-osp13-con3 | None | power on | manageable | False | | 9b02e6be-82a9-4ab8-9f51-39bbabede331 | yj23-osp13-com1 | None | power on | manageable | False | | c469e049-798c-4033-84f9-0e6035e00e01 | yj23-osp13-com2 | None | power on | manageable | False | | e91c9f74-4c34-4039-a69e-05c8b6d586f9 | yj23-osp13-swift1 | None | power on | manageable | False | +--------------------------------------+-------------------+---------------+-------------+--------------------+-------------+introspection

(undercloud) [stack@yj23-osp13-deploy ~]$ openstack overcloud node introspect --all-manageable --provide Waiting for introspection to finish... Started Mistral Workflow tripleo.baremetal.v1.introspect_manageable_nodes. Execution ID: 36593c2f-7acc-4a43-bdde-9991f7468280 Waiting for messages on queue 'tripleo' with no timeout. Introspection of node 96a5d2bf-a255-4a4a-a8bf-1b356c0b1a18 completed. Status:SUCCESS. Errors:None Introspection of node a9a79d92-f815-41e1-afdf-70af3da3ae59 completed. Status:SUCCESS. Errors:None Introspection of node 25a450b4-b766-431d-a16d-cf06e256dc6b completed. Status:SUCCESS. Errors:None Introspection of node f4083c08-6693-4ec8-8ad9-dea669e13cc1 completed. Status:SUCCESS. Errors:None Introspection of node c69be2c7-5ab9-4cd1-a5bf-107a266427cf completed. Status:SUCCESS. Errors:None Introspection of node 9034a333-10df-4d9b-bba7-02fbd2a1277b completed. Status:SUCCESS. Errors:None Successfully introspected 6 node(s).

During introspection, every overcloud node is powered on once so that its hardware information can be collected.

[root@kvm2 ~]# virsh list --all |grep -i yj23
 488   yj23-osp13-deploy   running
 495   yj23-osp13-ceph3    running
 496   yj23-osp13-ceph2    running
 497   yj23-osp13-ceph1    running
 541   yj23-osp13-com1     running
 542   yj23-osp13-com2     running
 543   yj23-osp13-con1     running
 544   yj23-osp13-con2     running
 -     yj23-osp13-con3     shut off
 -     yj23-osp13-swift1   shut off

-

specifying the root disk

(undercloud) [stack@yj23-osp13-deploy ~]$ openstack baremetal introspection data save yj23-osp13-con1 | jq ".inventory.disks"
[
  {
    "size": 53687091200,
    "serial": null,
    "wwn": null,
    "rotational": true,
    "vendor": "0x1af4",
    "name": "/dev/vda",
    "wwn_vendor_extension": null,
    "hctl": null,
    "wwn_with_extension": null,
    "by_path": "/dev/disk/by-path/pci-0000:00:0b.0",
    "model": ""
  }
]
(undercloud) [stack@yj23-osp13-deploy ~]$ echo 53687091200/1024/1024/1024 | bc
50
(undercloud) [stack@yj23-osp13-deploy ~]$ openstack baremetal node set --property root_device='{"size": "50"}' yj23-osp13-con1
(undercloud) [stack@yj23-osp13-deploy ~]$ openstack baremetal node set --property root_device='{"size": "50"}' yj23-osp13-con2
(undercloud) [stack@yj23-osp13-deploy ~]$ openstack baremetal node set --property root_device='{"size": "50"}' yj23-osp13-con3
(undercloud) [stack@yj23-osp13-deploy ~]$ openstack baremetal node set --property root_device='{"size": "50"}' yj23-osp13-com1
(undercloud) [stack@yj23-osp13-deploy ~]$ openstack baremetal node set --property root_device='{"size": "50"}' yj23-osp13-com2
(undercloud) [stack@yj23-osp13-deploy ~]$ openstack baremetal node set --property root_device='{"size": "50"}' yj23-osp13-swift1
(undercloud) [stack@yj23-osp13-deploy ~]$ openstack baremetal node show yj23-osp13-swift1
+------------------------+----------------------------------------------------------------+
| Field                  | Value |
+------------------------+----------------------------------------------------------------+
| boot_interface         | None |
| chassis_uuid           | None |
| clean_step             | {} |
| console_enabled        | False |
| console_interface      | None |
| created_at             | 2018-07-25T04:13:38+00:00 |
| deploy_interface       | None |
| driver                 | pxe_ipmitool |
| driver_info            | {u'ipmi_port': u'4046', u'ipmi_username': u'admin', u'deploy_kernel': u'78266ebd-d137-4005-88b7-e685ac4b6a47', u'ipmi_address': u'10.23.1.1', u'deploy_ramdisk': u'32edeabd-5dcb-4850-b6ca-b3658b2c9c51', u'ipmi_password': u'******'} |
| driver_internal_info   | {} |
| extra                  | {u'hardware_swift_object': u'extra_hardware-c69be2c7-5ab9-4cd1-a5bf-107a266427cf'} |
| inspect_interface      | None |
| inspection_finished_at | None |
| inspection_started_at  | None |
| instance_info          | {} |
| instance_uuid          | None |
| last_error             | None |
| maintenance            | False |
| maintenance_reason     | None |
| management_interface   | None |
| name                   | yj23-osp13-swift1 |
| network_interface      | flat |
| power_interface        | None |
| power_state            | power off |
| properties             | {u'cpu_arch': u'x86_64', u'root_device': {u'size': u'50'}, u'cpus': u'4', u'capabilities': u'cpu_aes:true,cpu_hugepages:true,boot_option:local,cpu_vt:true,cpu_hugepages_1g:true,boot_mode:bios', u'memory_mb': u'4096', u'local_gb': u'9'} |
| provision_state        | available |
| provision_updated_at   | 2018-07-25T04:18:16+00:00 |
| raid_config            | {} |
| raid_interface         | None |
| reservation            | None |
| resource_class         | baremetal |
| storage_interface      | noop |
| target_power_state     | None |
| target_provision_state | None |
| target_raid_config     | {} |
| updated_at             | 2018-07-25T04:25:36+00:00 |
| uuid                   | c69be2c7-5ab9-4cd1-a5bf-107a266427cf |
| vendor_interface       | None |
+------------------------+----------------------------------------------------------------+

The root disk can be identified by size, vendor, wwn, and similar hints. Here it is identified by size. When using size, give the value in GB (strictly GiB: 53687091200 bytes / 1024 / 1024 / 1024 = 50).
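The size hint can be derived from the introspected byte count instead of by hand. The following is a minimal sketch, not part of the original procedure: `bytes_to_gib` and `root_device_hint` are hypothetical helper names, and the loop assumes every node has the same 53687091200-byte disk, as the commands above do. It only prints the `openstack baremetal node set` commands and does not run them.

```shell
#!/bin/sh
# Hypothetical helpers for illustration; they are not part of the openstack CLI.

# Convert a byte count to whole GiB using integer division.
bytes_to_gib() {
    echo $(( $1 / 1024 / 1024 / 1024 ))
}

# Build the JSON value passed to --property root_device=...
root_device_hint() {
    printf '{"size": "%s"}' "$(bytes_to_gib "$1")"
}

# Dry run: print (do not execute) the command for each node in this lab.
for node in yj23-osp13-con1 yj23-osp13-con2 yj23-osp13-con3 \
            yj23-osp13-com1 yj23-osp13-com2 yj23-osp13-swift1; do
    echo "openstack baremetal node set --property root_device='$(root_device_hint 53687091200)' $node"
done
```

Printing the commands first makes it easy to review them before pasting them into the undercloud shell.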

-

ceph install

(undercloud) [root@yj23-osp13-deploy group_vars]# grep -iv '^$\|^#' /etc/ansible/hosts
[mons]
10.23.1.31
10.23.1.32
10.23.1.33
[osds]
10.23.1.31
10.23.1.32
10.23.1.33
[mgrs]
10.23.1.31
10.23.1.32
10.23.1.33
[mdss]
10.23.1.31
10.23.1.32
10.23.1.33
[rgws]
10.23.1.31
10.23.1.32
10.23.1.33
(undercloud) [root@yj23-osp13-deploy group_vars]# grep -iv '^$\|^#' all.yml
---
dummy:
fetch_directory: ~/ceph-ansible-keys
ceph_repository_type: cdn
ceph_origin: repository
ceph_repository: rhcs
ceph_rhcs_version: 3
fsid: e05eb6ce-9c8c-4f64-937f-7b977fd7ba33
generate_fsid: false
cephx: true
monitor_interface: eth1.30
monitor_address: 10.23.30.31,10.23.30.32,10.23.30.33
monitor_address_block: 10.23.30.0/24
ip_version: ipv4
journal_size: 256
public_network: 10.23.30.0/24
cluster_network: 10.23.40.0/24
osd_objectstore: bluestore
radosgw_civetweb_port: 8080
radosgw_civetweb_num_threads: 50
radosgw_interface: eth1.30
os_tuning_params:
  - { name: fs.file-max, value: 26234859 }
  - { name: vm.zone_reclaim_mode, value: 0 }
  - { name: vm.swappiness, value: 10 }
  - { name: vm.min_free_kbytes, value: "{{ vm_min_free_kbytes }}" }
openstack_config: true
openstack_glance_pool:
  name: "images"
  pg_num: 64
  pgp_num: 64
  rule_name: "replicated_rule"
  type: 1
  erasure_profile: ""
  expected_num_objects: ""
openstack_cinder_pool:
  name: "volumes"
  pg_num: 64
  pgp_num: 64
  rule_name: "replicated_rule"
  type: 1
  erasure_profile: ""
  expected_num_objects: ""
openstack_nova_pool:
  name: "vms"
  pg_num: 64
  pgp_num: 64
  rule_name: "replicated_rule"
  type: 1
  erasure_profile: ""
  expected_num_objects: ""
openstack_cinder_backup_pool:
  name: "backups"
  pg_num: 64
  pgp_num: 64
  rule_name: "replicated_rule"
  type: 1
  erasure_profile: ""
  expected_num_objects: ""
openstack_gnocchi_pool:
  name: "metrics"
  pg_num: 64
  pgp_num: 64
  rule_name: "replicated_rule"
  type: 1
  erasure_profile: ""
  expected_num_objects: ""
openstack_pools:
  - "{{ openstack_glance_pool }}"
  - "{{ openstack_cinder_pool }}"
  - "{{ openstack_nova_pool }}"
  - "{{ openstack_cinder_backup_pool }}"
  - "{{ openstack_gnocchi_pool }}"
openstack_keys:
  - { name: client.glance, caps: { mon: "profile rbd", osd: "profile rbd pool=volumes, profile rbd pool={{ openstack_glance_pool.name }}"}, mode: "0600" }
  - { name: client.cinder, caps: { mon: "profile rbd", osd: "profile rbd pool={{ openstack_cinder_pool.name }}, profile rbd pool={{ openstack_nova_pool.name }}, profile rbd pool={{ openstack_glance_pool.name }}"}, mode: "0600" }
  - { name: client.cinder-backup, caps: { mon: "profile rbd", osd: "profile rbd pool={{ openstack_cinder_backup_pool.name }}"}, mode: "0600" }
  - { name: client.gnocchi, caps: { mon: "profile rbd", osd: "profile rbd pool={{ openstack_gnocchi_pool.name }}"}, mode: "0600", }
  - { name: client.openstack, caps: { key: "AQC7XjtbAAAAABAABV3Ak9fWPwqSjHq2UiNGDw==", mon: "profile rbd", osd: "profile rbd pool={{ openstack_glance_pool.name }}, profile rbd pool={{ openstack_nova_pool.name }}, profile rbd pool={{ openstack_cinder_pool.name }}, profile rbd pool={{ openstack_cinder_backup_pool.name }}"}, mode: "0600" }
(undercloud) [root@yj23-osp13-deploy group_vars]# grep -iv '^$\|^#' mons.yml
---
dummy:
(undercloud) [root@yj23-osp13-deploy group_vars]# grep -iv '^$\|^#' osds.yml
---
dummy:
osd_scenario: collocated
devices:
  - /dev/vdb
  - /dev/vdc
  - /dev/vdd
(undercloud) [root@yj23-osp13-deploy group_vars]# grep -iv '^$\|^#' rgws.yml
---
dummy:
(undercloud) [root@yj23-osp13-deploy group_vars]# grep -iv '^$\|^#' mdss.yml
---
dummy:
copy_admin_key: true
ceph_mds_docker_memory_limit: 2g
ceph_mds_docker_cpu_limit: 1
(undercloud) [root@yj23-osp13-deploy group_vars]#

Before installing, remove the tasks that enable repositories through subscription-manager.

(undercloud) [root@yj23-osp13-deploy ceph-ansible]# cat roles/ceph-common/tasks/installs/prerequisite_rhcs_cdn_install.yml --- - name: check if the red hat storage monitor repo is already present shell: yum --noplugins --cacheonly repolist | grep -sq rhel-7-server-rhceph-{{ ceph_rhcs_version }}-mon-rpms changed_when: false failed_when: false register: rhcs_mon_repo check_mode: no when: - mon_group_name in group_names #- name: enable red hat storage monitor repository # command: subscription-manager repos --enable rhel-7-server-rhceph-{{ ceph_rhcs_version }}-mon-rpms # changed_when: false # when: # - mon_group_name in group_names # - rhcs_mon_repo.rc != 0 - name: check if the red hat storage osd repo is already present shell: yum --noplugins --cacheonly repolist | grep -sq rhel-7-server-rhceph-{{ ceph_rhcs_version }}-osd-rpms changed_when: false failed_when: false register: rhcs_osd_repo check_mode: no when: - osd_group_name in group_names #- name: enable red hat storage osd repository # command: subscription-manager repos --enable rhel-7-server-rhceph-{{ ceph_rhcs_version }}-osd-rpms # changed_when: false # when: # - osd_group_name in group_names # - rhcs_osd_repo.rc != 0 - name: check if the red hat storage tools repo is already present shell: yum --noplugins --cacheonly repolist | grep -sq rhel-7-server-rhceph-{{ ceph_rhcs_version }}-tools-rpms changed_when: false failed_when: false register: rhcs_tools_repo check_mode: no when: - (rgw_group_name in group_names or mds_group_name in group_names or nfs_group_name in group_names or iscsi_gw_group_name in group_names or client_group_name in group_names) #- name: enable red hat storage tools repository # command: subscription-manager repos --enable rhel-7-server-rhceph-{{ ceph_rhcs_version }}-tools-rpms # changed_when: false # when: # - (rgw_group_name in group_names or mds_group_name in group_names or nfs_group_name in group_names or iscsi_gw_group_name in group_names or client_group_name in group_names) # - 
rhcs_tools_repo.rc != 0

The three "enable red hat storage ... repository" tasks are commented out.

(undercloud) [root@yj23-osp13-deploy ceph-ansible]# ansible-playbook site.yml ... PLAY RECAP ********************************************************************************************************************************************************************************************************* 10.23.1.31 : ok=342 changed=44 unreachable=0 failed=0 10.23.1.32 : ok=314 changed=43 unreachable=0 failed=0 10.23.1.33 : ok=322 changed=51 unreachable=0 failed=0 INSTALLER STATUS *************************************************************************************************************************************************************************************************** Install Ceph Monitor : Complete (0:05:40) Install Ceph Manager : Complete (0:01:13) Install Ceph OSD : Complete (0:02:07) Install Ceph MDS : Complete (0:01:10) Install Ceph RGW : Complete (0:00:51) Wednesday 25 July 2018 13:50:41 +0900 (0:00:00.131) 0:11:07.796 ******** =============================================================================== ceph-common : install redhat ceph-mon package -------------------------------------------------------------------------------------------------------------------------------------------------------------- 99.24s ceph-common : install redhat ceph-common ------------------------------------------------------------------------------------------------------------------------------------------------------------------- 94.40s ceph-mgr : install ceph-mgr package on RedHat or SUSE ------------------------------------------------------------------------------------------------------------------------------------------------------ 25.57s ceph-osd : manually prepare ceph "bluestore" non-containerized osd disk(s) with collocated osd data and journal -------------------------------------------------------------------------------------------- 23.76s ceph-common : install redhat dependencies 
------------------------------------------------------------------------------------------------------------------------------------------------------------------ 23.30s ceph-mds : install redhat ceph-mds package ----------------------------------------------------------------------------------------------------------------------------------------------------------------- 17.44s ceph-common : install redhat ceph-osd package -------------------------------------------------------------------------------------------------------------------------------------------------------------- 16.00s ceph-mon : collect admin and bootstrap keys ---------------------------------------------------------------------------------------------------------------------------------------------------------------- 13.26s ceph-osd : copy to other mons the openstack cephx key(s) --------------------------------------------------------------------------------------------------------------------------------------------------- 11.44s ceph-config : generate ceph configuration file: ceph.conf -------------------------------------------------------------------------------------------------------------------------------------------------- 10.91s ceph-osd : create openstack cephx key(s) -------------------------------------------------------------------------------------------------------------------------------------------------------------------- 7.07s ceph-osd : create openstack pool(s) ------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 6.74s ceph-common : install redhat ceph-radosgw package ----------------------------------------------------------------------------------------------------------------------------------------------------------- 6.50s ceph-common : install ntp 
----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 5.93s ceph-osd : activate osd(s) when device is a disk ------------------------------------------------------------------------------------------------------------------------------------------------------------ 5.00s ceph-defaults : create ceph initial directories ------------------------------------------------------------------------------------------------------------------------------------------------------------- 4.45s ceph-mon : create ceph mgr keyring(s) when mon is not containerized ----------------------------------------------------------------------------------------------------------------------------------------- 4.44s ceph-defaults : create ceph initial directories ------------------------------------------------------------------------------------------------------------------------------------------------------------- 4.05s ceph-defaults : create ceph initial directories ------------------------------------------------------------------------------------------------------------------------------------------------------------- 3.97s ceph-osd : list existing pool(s) ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 3.96s (undercloud) [root@yj23-osp13-deploy ceph-ansible]# [root@yj23-osp13-ceph1 ~]# ceph -s cluster: id: e05eb6ce-9c8c-4f64-937f-7b977fd7ba33 health: HEALTH_OK services: mon: 3 daemons, quorum yj23-osp13-ceph1,yj23-osp13-ceph2,yj23-osp13-ceph3 mgr: yj23-osp13-ceph1(active), standbys: yj23-osp13-ceph2, yj23-osp13-ceph3 mds: cephfs-1/1/1 up {0=yj23-osp13-ceph3=up:active}, 2 up:standby osd: 9 osds: 9 up, 9 in rgw: 3 daemons active data: pools: 11 pools, 368 pgs objects: 212 objects, 5401 bytes usage: 9547 MB used, 81702 MB / 91250 MB avail 
pgs: 368 active+clean [root@yj23-osp13-ceph1 ~]# ceph df GLOBAL: SIZE AVAIL RAW USED %RAW USED 91250M 81702M 9547M 10.46 POOLS: NAME ID USED %USED MAX AVAIL OBJECTS images 1 0 0 77138M 0 volumes 2 0 0 77138M 0 vms 3 0 0 77138M 0 backups 4 0 0 77138M 0 metrics 5 0 0 77138M 0 cephfs_data 6 0 0 25712M 0 cephfs_metadata 7 2246 0 25712M 21 .rgw.root 8 3155 0 25712M 8 default.rgw.control 9 0 0 25712M 8 default.rgw.meta 10 0 0 25712M 0 default.rgw.log 11 0 0 25712M 175 [root@yj23-osp13-ceph1 ~]#

openstack overcloud preparation

-

Tag each node so that it receives the hostname you want.

(undercloud) [stack@yj23-osp13-deploy deploy]$ openstack baremetal node set --property capabilities='node:controller-0,boot_option:local' yj23-osp13-con1 (undercloud) [stack@yj23-osp13-deploy deploy]$ openstack baremetal node set --property capabilities='node:controller-1,boot_option:local' yj23-osp13-con2 (undercloud) [stack@yj23-osp13-deploy deploy]$ openstack baremetal node set --property capabilities='node:controller-2,boot_option:local' yj23-osp13-con3 (undercloud) [stack@yj23-osp13-deploy deploy]$ openstack baremetal node set --property capabilities='node:compute-0,boot_option:local' yj23-osp13-com1 (undercloud) [stack@yj23-osp13-deploy deploy]$ openstack baremetal node set --property capabilities='node:compute-1,boot_option:local' yj23-osp13-com2 (undercloud) [stack@yj23-osp13-deploy deploy]$ openstack baremetal node set --property capabilities='node:objectstorage-0,boot_option:local' yj23-osp13-swift1 (undercloud) [stack@yj23-osp13-deploy deploy]$ openstack baremetal node show yj23-osp13-con1 +------------------------+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+ | Field | Value | +------------------------+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+ | boot_interface | None | | chassis_uuid | None | | clean_step | {} | | console_enabled | False | | console_interface | None | | created_at | 2018-07-25T04:13:29+00:00 | | deploy_interface | None | | driver | pxe_ipmitool | | driver_info | {u'ipmi_port': u'4041', u'ipmi_username': u'admin', u'deploy_kernel': u'78266ebd-d137-4005-88b7-e685ac4b6a47', u'ipmi_address': u'10.23.1.1', u'deploy_ramdisk': u'32edeabd-5dcb-4850-b6ca-b3658b2c9c51', 
u'ipmi_password': u'******'} | | driver_internal_info | {} | | extra | {u'hardware_swift_object': u'extra_hardware-96a5d2bf-a255-4a4a-a8bf-1b356c0b1a18'} | | inspect_interface | None | | inspection_finished_at | None | | inspection_started_at | None | | instance_info | {} | | instance_uuid | None | | last_error | None | | maintenance | False | | maintenance_reason | None | | management_interface | None | | name | yj23-osp13-con1 | | network_interface | flat | | power_interface | None | | power_state | power off | | properties | {u'cpu_arch': u'x86_64', u'root_device': {u'size': u'50'}, u'cpus': u'4', u'capabilities': u'node:controller-0,boot_option:local', u'memory_mb': u'12288', u'local_gb': u'49'} | | provision_state | available | | provision_updated_at | 2018-07-25T04:18:16+00:00 | | raid_config | {} | | raid_interface | None | | reservation | None | | resource_class | baremetal | | storage_interface | noop | | target_power_state | None | | target_provision_state | None | | target_raid_config | {} | | updated_at | 2018-07-25T04:51:04+00:00 | | uuid | 96a5d2bf-a255-4a4a-a8bf-1b356c0b1a18 | | vendor_interface | None | +------------------------+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
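The six `node set` calls above follow one pattern, so they can be generated from a small table. This is a sketch under assumptions: `capabilities_for` is a hypothetical helper, and the name-to-node-id pairs are simply copied from the commands above. The loop prints the commands rather than running them.

```shell
#!/bin/sh
# Hypothetical helper: build the capabilities string for one scheduler node id.
capabilities_for() {
    printf 'node:%s,boot_option:local' "$1"
}

# Each input line is "<ironic node name> <scheduler node id>".
while read -r name node_id; do
    echo "openstack baremetal node set --property capabilities='$(capabilities_for "$node_id")' $name"
done <<'EOF'
yj23-osp13-con1 controller-0
yj23-osp13-con2 controller-1
yj23-osp13-con3 controller-2
yj23-osp13-com1 compute-0
yj23-osp13-com2 compute-1
yj23-osp13-swift1 objectstorage-0
EOF
```

Note that setting `capabilities` this way replaces the whole string, which is why the `cpu_*` capabilities recorded by introspection no longer appear in the `node show` output above.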

openstack overcloud deploy

-

deploy command

(undercloud) [stack@yj23-osp13-deploy ~]$ cat deploy/deploy-sahara-ovn-barbican-manila.sh
#!/bin/bash
openstack overcloud deploy --templates \
  --stack yj23-osp13 \
  -e /home/stack/templates/overcloud_images_local_barbican.yaml \
  -e /home/stack/templates/node-info.yaml \
  -e /home/stack/templates/scheduler-hints.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \
  -e /home/stack/templates/network-environment.yaml \
  -e /home/stack/templates/ips-from-pool-all.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/services-docker/neutron-ovn-dvr-ha.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/services/sahara.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/low-memory-usage.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/manila-cephfsnative-config-docker.yaml \
  -e /home/stack/templates/external-ceph.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/services/barbican.yaml \
  -e /usr/share/openstack-tripleo-heat-templates/environments/barbican-backend-simple-crypto.yaml \
  -e /home/stack/templates/barbican-configure.yml \
  --libvirt-type kvm \
  --timeout 100 \
  --ntp-server 192.1.1.1 \
  --log-file=log/overcloud_deploy-`date +%Y%m%d-%H:%M`.log
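A deploy with this many `-e` files fails late if one custom file is missing, so a quick existence check beforehand can save time. This is a hedged sketch, not part of the original script: `missing_env_files` is a hypothetical helper. It checks only the custom files under /home/stack/templates, because at least one of the /usr/share files (network-isolation.yaml) is rendered at deploy time and is not expected to exist on disk.

```shell
#!/bin/sh
# Hypothetical helper: print every argument that is not an existing regular
# file, and return 1 if at least one is missing.
missing_env_files() {
    rc=0
    for f in "$@"; do
        if [ ! -f "$f" ]; then
            echo "$f"
            rc=1
        fi
    done
    return $rc
}

# Check the custom environment files referenced by the deploy script.
missing_env_files \
    /home/stack/templates/overcloud_images_local_barbican.yaml \
    /home/stack/templates/node-info.yaml \
    /home/stack/templates/scheduler-hints.yaml \
    /home/stack/templates/network-environment.yaml \
    /home/stack/templates/ips-from-pool-all.yaml \
    /home/stack/templates/external-ceph.yaml \
    /home/stack/templates/barbican-configure.yml \
    || echo "some environment files are missing (listed above)" >&2
```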

-


/home/stack/templates/overcloud_images_local_barbican.yaml is the file created earlier that defines the docker image paths.

-

This file sets the overcloud flavors and node counts. To customize the overcloud hostnames, the flavor must be set to baremetal.

(undercloud) [stack@yj23-osp13-deploy ~]$ grep -iv '^$\|^#' /home/stack/templates/node-info.yaml
parameter_defaults:
  #OvercloudControllerFlavor: control
  #OvercloudComputeFlavor: compute
  #OvercloudSwiftStorageFlavor: swift-storage
  OvercloudControllerFlavor: baremetal
  OvercloudComputeFlavor: baremetal
  OvercloudSwiftStorageFlavor: baremetal
  ControllerCount: 3
  ComputeCount: 1
  ObjectStorageCount: 1
(undercloud) [stack@yj23-osp13-deploy ~]$

-

The capabilities set above are used to give the scheduler a hint for each node. HostnameMap keys have the form <stack name>-<node id>: in yj23-osp13-controller-0, the stack name is yj23-osp13 and the node id is controller-0. The entry means that this node's hostname is changed to yj23-osp13-con1.

(undercloud) [stack@yj23-osp13-deploy ~]$ cat /home/stack/templates/scheduler-hints.yaml
parameter_defaults:
  ControllerSchedulerHints:
    'capabilities:node': 'controller-%index%'
  NovaComputeSchedulerHints:
    'capabilities:node': 'compute-%index%'
  ObjectStorageSchedulerHints:
    'capabilities:node': 'objectstorage-%index%'
  HostnameMap:
    yj23-osp13-controller-0: yj23-osp13-con1
    yj23-osp13-controller-1: yj23-osp13-con2
    yj23-osp13-controller-2: yj23-osp13-con3
    yj23-osp13-compute-0: yj23-osp13-com1
    yj23-osp13-compute-1: yj23-osp13-com2
    yj23-osp13-objectstorage-0: yj23-osp13-swift1
(undercloud) [stack@yj23-osp13-deploy ~]$

- /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml is needed when each network is configured separately. The file does not actually exist on disk; it is generated dynamically from a jinja2 template at deploy time.
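The HostnameMap naming rule described above (<stack name>-<node id> mapped to the desired hostname) can be sketched as a small generator. `hostname_map_entry` is a hypothetical helper, and the indentation it prints assumes the entries sit under `parameter_defaults:` / `HostnameMap:` with two-space nesting.

```shell
#!/bin/sh
# Hypothetical helper: print one HostnameMap entry.
#   $1 = stack name, $2 = node id (role-index), $3 = desired hostname
hostname_map_entry() {
    printf '    %s-%s: %s\n' "$1" "$2" "$3"
}

STACK=yj23-osp13   # must match the --stack value used at deploy time

echo "  HostnameMap:"
hostname_map_entry "$STACK" controller-0 yj23-osp13-con1
hostname_map_entry "$STACK" controller-1 yj23-osp13-con2
hostname_map_entry "$STACK" controller-2 yj23-osp13-con3
hostname_map_entry "$STACK" compute-0 yj23-osp13-com1
hostname_map_entry "$STACK" compute-1 yj23-osp13-com2
hostname_map_entry "$STACK" objectstorage-0 yj23-osp13-swift1
```

Keeping the stack name in one variable makes it harder for the map keys to drift from the `--stack` argument, which would leave the default hostnames in place.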

-

Below is the actual network configuration. The resource_registry entries point each role at its NIC config template, which determines which network is used on which NIC.

(undercloud) [stack@yj23-osp13-deploy ~]$ grep -iv '^$\|^#\|^[[:space:]]*#' /home/stack/templates/network-environment.yaml
resource_registry:
  OS::TripleO::Compute::Net::SoftwareConfig: /home/stack/templates/nic-configs/custom-configs/compute.yaml
  OS::TripleO::Controller::Net::SoftwareConfig: /home/stack/templates/nic-configs/custom-configs/controller.yaml
  OS::TripleO::ObjectStorage::Net::SoftwareConfig: /home/stack/templates/nic-configs/custom-configs/swift-storage.yaml
parameter_defaults:
  TimeZone: 'Asia/Seoul'
  ControlFixedIPs: [{'ip_address':'192.1.1.10'}]
  InternalApiVirtualFixedIPs: [{'ip_address':'172.17.0.10'}]
  PublicVirtualFixedIPs: [{'ip_address':'192.168.92.110'}]
  StorageVirtualFixedIPs: [{'ip_address':'10.23.30.10'}]
  StorageMgmtVirtualFixedIPs: [{'ip_address':'10.23.40.10'}]
  AdminPassword: adminpassword
  ControlPlaneSubnetCidr: '24'
  ControlPlaneDefaultRoute: 192.1.1.1
  EC2MetadataIp: 192.1.1.1 # Generally the IP of the Undercloud
  InternalApiNetCidr: 172.17.0.0/24
  StorageNetCidr: 10.23.30.0/24
  StorageMgmtNetCidr: 10.23.40.0/24
  TenantNetCidr: 172.16.0.0/24
  ExternalNetCidr: 192.168.0.0/16
  InternalApiNetworkVlanID: 20
  StorageNetworkVlanID: 30
  StorageMgmtNetworkVlanID: 40
  TenantNetworkVlanID: 50
  InternalApiAllocationPools: [{'start': '172.17.0.10', 'end': '172.17.0.30'}]
  StorageAllocationPools: [{'start': '10.23.30.10', 'end': '10.23.30.30'}]
  StorageMgmtAllocationPools: [{'start': '10.23.40.10', 'end': '10.23.40.30'}]
  TenantAllocationPools: [{'start': '172.16.0.10', 'end': '172.16.0.30'}]
  ExternalAllocationPools: [{'start': '192.168.92.111', 'end': '192.168.92.140'}]
  ExternalInterfaceDefaultRoute: 192.168.92.1
  DnsServers: ["10.23.1.1"]
  NeutronNetworkType: 'geneve'
  NeutronTunnelTypes: 'vxlan'
  NeutronNetworkVLANRanges: 'datacentre,tenant:1:1000'
  NeutronBridgeMappings: 'tenant:br-tenant,datacentre:br-ex'
  BondInterfaceOvsOptions: "bond_mode=active-backup"
(undercloud) [stack@yj23-osp13-deploy ~]$

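Below is the NIC configuration for the controllers. The resources section is the important part: it specifies how each NIC is used. nic1 corresponds to eth0. Actual NIC names vary with the network driver, and writing eth0 in place of nic1 works just as well.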

(undercloud) [stack@yj23-osp13-deploy ~]$ grep -iv '^$\|^#\|^[[:space:]]*#' /home/stack/templates/nic-configs/custom-configs/controller.yaml
heat_template_version: pike
description: >
...
resources:
  OsNetConfigImpl:
    type: OS::Heat::SoftwareConfig
    properties:
      group: script
      config:
        str_replace:
          template:
            get_file: /usr/share/openstack-tripleo-heat-templates/network/scripts/run-os-net-config.sh
          params:
            $network_config:
              network_config:
              - type: ovs_bridge
                name: br-ctlplane
                use_dhcp: false
                addresses:
                - ip_netmask:
                    list_join:
                    - /
                    - - get_param: ControlPlaneIp
                      - get_param: ControlPlaneSubnetCidr
                routes:
                - ip_netmask: 169.254.169.254/32
                  next_hop:
                    get_param: EC2MetadataIp
                members:
                - type: interface
                  name: nic1
                  primary: true
                  use_dhcp: false
              - type: ovs_bridge
                name: br-ex
                use_dhcp: false
                dns_servers:
                  get_param: DnsServers
                addresses:
                - ip_netmask:
                    get_param: ExternalIpSubnet
                routes:
                - default: true
                  next_hop:
                    get_param: ExternalInterfaceDefaultRoute
                members:
                - type: interface
                  name: nic6
                  primary: true
                  use_dhcp: false
              - type: ovs_bridge
                name: br-con-bond1
                use_dhcp: true
                members:
                - type: ovs_bond
                  name: con-bond1
                  ovs_options:
                    get_param: BondInterfaceOvsOptions
                  members:
                  - type: interface
                    name: nic2
                    primary: true
                  - type: interface
                    name: nic3
                  - type: interface
                    name: nic4
                - type: vlan
                  use_dhcp: false
                  vlan_id:
                    get_param: InternalApiNetworkVlanID
                  addresses:
                  - ip_netmask:
                      get_param: InternalApiIpSubnet
                - type: vlan
                  use_dhcp: false
                  vlan_id:
                    get_param: StorageNetworkVlanID
                  addresses:
                  - ip_netmask:
                      get_param: StorageIpSubnet
                - type: vlan
                  use_dhcp: false
                  vlan_id:
                    get_param: StorageMgmtNetworkVlanID
                  addresses:
                  - ip_netmask:
                      get_param: StorageMgmtIpSubnet
                - type: vlan
                  use_dhcp: false
                  vlan_id:
                    get_param: TenantNetworkVlanID
                  addresses:
                  - ip_netmask:
                      get_param: TenantIpSubnet
outputs:
  OS::stack_id:
    description: The OsNetConfigImpl resource.
    value:
      get_resource: OsNetConfigImpl
(undercloud) [stack@yj23-osp13-deploy ~]$
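The nic1 = eth0 correspondence mentioned above can be written down as a tiny helper for cross-checking a template against a node. This is only a sketch under a stated assumption: interfaces are named ethN, numbered from 0, and all present, as in this lab. `nic_to_eth` is a hypothetical name, and the real mapping done by os-net-config may differ on hardware where some interfaces are inactive or named differently.

```shell
#!/bin/sh
# Hypothetical helper: map an abstract "nicN" name to "eth(N-1)".
# Assumes kernel-style ethN naming starting at eth0 (an assumption of this sketch).
nic_to_eth() {
    n=${1#nic}              # strip the "nic" prefix, leaving the number
    echo "eth$(( n - 1 ))"
}

# NICs referenced by the controller template above.
for nic in nic1 nic2 nic3 nic4 nic6; do
    echo "$nic -> $(nic_to_eth "$nic")"
done
```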

nic config of the compute node

(undercloud) [stack@yj23-osp13-deploy ~]$ grep -iv '^$\|^#\|^[[:space:]]*#' /home/stack/templates/nic-configs/custom-configs/compute.yaml
heat_template_version: pike
description: >
...
resources:
  OsNetConfigImpl:
    type: OS::Heat::SoftwareConfig
    properties:
      group: script
      config:
        str_replace:
          template:
            get_file: /usr/share/openstack-tripleo-heat-templates/network/scripts/run-os-net-config.sh
          params:
            $network_config:
              network_config:
              - type: ovs_bridge
                name: br_ctlplane
                use_dhcp: false
                dns_servers:
                  get_param: DnsServers
                addresses:
                - ip_netmask:
                    list_join:
                    - /
                    - - get_param: ControlPlaneIp
                      - get_param: ControlPlaneSubnetCidr
                routes:
                - ip_netmask: 169.254.169.254/32
                  next_hop:
                    get_param: EC2MetadataIp
                - default: true
                  next_hop:
                    get_param: ControlPlaneDefaultRoute
                members:
                - type: interface
                  name: nic1
                  primary: true
              - type: ovs_bridge
                name: br-com-bond1
                use_dhcp: true
                members:
                - type: ovs_bond
                  name: com-bond1
                  ovs_options:
                    get_param: BondInterfaceOvsOptions
                  members:
                  - type: interface
                    name: nic2
                    primary: true
                  - type: interface
                    name: nic3
                  - type: interface
                    name: nic4
                - type: vlan
                  use_dhcp: false
                  vlan_id:
                    get_param: InternalApiNetworkVlanID
                  addresses:
                  - ip_netmask:
                      get_param: InternalApiIpSubnet
                - type: vlan
                  use_dhcp: false
                  vlan_id:
                    get_param: StorageNetworkVlanID
                  addresses:
                  - ip_netmask:
                      get_param: StorageIpSubnet
                - type: vlan
                  use_dhcp: false
                  vlan_id:
                    get_param: TenantNetworkVlanID
                  addresses:
                  - ip_netmask:
                      get_param: TenantIpSubnet
outputs:
  OS::stack_id:
    description: The OsNetConfigImpl resource.
    value:
      get_resource: OsNetConfigImpl
(undercloud) [stack@yj23-osp13-deploy ~]$

nic config of the swift node

(undercloud) [stack@yj23-osp13-deploy ~]$ grep -iv '^$\|^#\|^[[:space:]]*#' /home/stack/templates/nic-configs/custom-configs/swift-storage.yaml
heat_template_version: pike
description: >
...
resources:
  OsNetConfigImpl:
    type: OS::Heat::SoftwareConfig
    properties:
      group: script
      config:
        str_replace:
          template:
            get_file: /usr/share/openstack-tripleo-heat-templates/network/scripts/run-os-net-config.sh
          params:
            $network_config:
              network_config:
              - type: ovs_bridge
                name: br-ctlplane
                use_dhcp: false
                addresses:
                - ip_netmask:
                    list_join:
                    - /
                    - - get_param: ControlPlaneIp
                      - get_param: ControlPlaneSubnetCidr
                routes:
                - ip_netmask: 169.254.169.254/32
                  next_hop:
                    get_param: EC2MetadataIp
                members:
                - type: interface
                  name: nic1
                  primary: true
                  use_dhcp: false
              - type: ovs_bridge
                name: br-swift-bond1
                use_dhcp: true
                members:
                - type: ovs_bond
                  name: swift-bond1
                  ovs_options:
                    get_param: BondInterfaceOvsOptions
                  members:
                  - type: interface
                    name: nic2
                    primary: true
                  - type: interface
                    name: nic3
                  - type: interface
                    name: nic4
                - type: vlan
                  use_dhcp: false
                  vlan_id:
                    get_param: InternalApiNetworkVlanID
                  addresses:
                  - ip_netmask:
                      get_param: InternalApiIpSubnet
                - type: vlan
                  use_dhcp: false
                  vlan_id:
                    get_param: StorageNetworkVlanID
                  addresses:
                  - ip_netmask:
                      get_param: StorageIpSubnet
                - type: vlan
                  use_dhcp: false
                  vlan_id:
                    get_param: StorageMgmtNetworkVlanID
                  addresses:
                  - ip_netmask:
                      get_param: StorageMgmtIpSubnet
outputs:
  OS::stack_id:
    description: The OsNetConfigImpl resource.
    value:
      get_resource: OsNetConfigImpl
(undercloud) [stack@yj23-osp13-deploy ~]$

-


Assigning fixed IPs to specific nodes. For the controllers' external IPs, con1 gets 192.168.92.111, con2 gets 192.168.92.112, and con3 gets 192.168.92.113.

(undercloud) [stack@yj23-osp13-deploy ~]$ grep -iv '^$\|^#\|^[[:space:]]*#' /home/stack/templates/ips-from-pool-all.yaml
resource_registry:
  OS::TripleO::Controller::Ports::ExternalPort: /usr/share/openstack-tripleo-heat-templates/network/ports/external_from_pool.yaml
  OS::TripleO::Controller::Ports::InternalApiPort: /usr/share/openstack-tripleo-heat-templates/network/ports/internal_api_from_pool.yaml
  OS::TripleO::Controller::Ports::StoragePort: /usr/share/openstack-tripleo-heat-templates/network/ports/storage_from_pool.yaml
  OS::TripleO::Controller::Ports::StorageMgmtPort: /usr/share/openstack-tripleo-heat-templates/network/ports/storage_mgmt_from_pool.yaml
  OS::TripleO::Controller::Ports::TenantPort: /usr/share/openstack-tripleo-heat-templates/network/ports/tenant_from_pool.yaml
  OS::TripleO::Compute::Ports::ExternalPort: /usr/share/openstack-tripleo-heat-templates/network/ports/noop.yaml
  OS::TripleO::Compute::Ports::InternalApiPort: /usr/share/openstack-tripleo-heat-templates/network/ports/internal_api_from_pool.yaml
  OS::TripleO::Compute::Ports::StoragePort: /usr/share/openstack-tripleo-heat-templates/network/ports/storage_from_pool.yaml
  OS::TripleO::Compute::Ports::StorageMgmtPort: /usr/share/openstack-tripleo-heat-templates/network/ports/noop.yaml
  OS::TripleO::Compute::Ports::TenantPort: /usr/share/openstack-tripleo-heat-templates/network/ports/tenant_from_pool.yaml
  OS::TripleO::CephStorage::Ports::ExternalPort: /usr/share/openstack-tripleo-heat-templates/network/ports/noop.yaml
  OS::TripleO::CephStorage::Ports::InternalApiPort: /usr/share/openstack-tripleo-heat-templates/network/ports/noop.yaml
  OS::TripleO::CephStorage::Ports::StoragePort: /usr/share/openstack-tripleo-heat-templates/network/ports/storage_from_pool.yaml
  OS::TripleO::CephStorage::Ports::StorageMgmtPort: /usr/share/openstack-tripleo-heat-templates/network/ports/storage_mgmt_from_pool.yaml
  OS::TripleO::CephStorage::Ports::TenantPort: /usr/share/openstack-tripleo-heat-templates/network/ports/noop.yaml
  OS::TripleO::ObjectStorage::Ports::ExternalPort: /usr/share/openstack-tripleo-heat-templates/network/ports/noop.yaml
  OS::TripleO::ObjectStorage::Ports::InternalApiPort: /usr/share/openstack-tripleo-heat-templates/network/ports/internal_api_from_pool.yaml
  OS::TripleO::ObjectStorage::Ports::StoragePort: /usr/share/openstack-tripleo-heat-templates/network/ports/storage_from_pool.yaml
  OS::TripleO::ObjectStorage::Ports::StorageMgmtPort: /usr/share/openstack-tripleo-heat-templates/network/ports/storage_mgmt_from_pool.yaml
  OS::TripleO::ObjectStorage::Ports::TenantPort: /usr/share/openstack-tripleo-heat-templates/network/ports/noop.yaml
  OS::TripleO::BlockStorage::Ports::ExternalPort: /usr/share/openstack-tripleo-heat-templates/network/ports/noop.yaml
  OS::TripleO::BlockStorage::Ports::InternalApiPort: /usr/share/openstack-tripleo-heat-templates/network/ports/internal_api_from_pool.yaml
  OS::TripleO::BlockStorage::Ports::StoragePort: /usr/share/openstack-tripleo-heat-templates/network/ports/storage_from_pool.yaml
  OS::TripleO::BlockStorage::Ports::StorageMgmtPort: /usr/share/openstack-tripleo-heat-templates/network/ports/storage_mgmt_from_pool.yaml
  OS::TripleO::BlockStorage::Ports::TenantPort: /usr/share/openstack-tripleo-heat-templates/network/ports/noop.yaml
parameter_defaults:
  ControllerIPs:
    external:
    - 192.168.92.111
    - 192.168.92.112
    - 192.168.92.113
    internal_api:
    - 172.17.0.11
    - 172.17.0.12
    - 172.17.0.13
    storage:
    - 10.23.30.11
    - 10.23.30.12
    - 10.23.30.13
    storage_mgmt:
    - 10.23.40.11
    - 10.23.40.12
    - 10.23.40.13
    tenant:
    - 172.16.0.11
    - 172.16.0.12
    - 172.16.0.13
  ComputeIPs:
    internal_api:
    - 172.17.0.21
    - 172.17.0.22
    storage:
    - 10.23.30.21
    - 10.23.30.22
    tenant:
    - 172.16.0.21
    - 172.16.0.22
  SwiftStorageIPs:
    internal_api:
    - 172.17.0.41
    - 172.17.0.42
    storage:
    - 10.23.30.41
    - 10.23.30.42
    storage_mgmt:
    - 10.23.40.41
    - 10.23.40.42
(undercloud) [stack@yj23-osp13-deploy ~]$

OVN configuration

(undercloud) [stack@yj23-osp13-deploy ~]$ grep -iv '^$\|^#\|^[[:space:]]*#' /usr/share/openstack-tripleo-heat-templates/environments/services-docker/neutron-ovn-dvr-ha.yaml
resource_registry:
  OS::TripleO::Docker::NeutronMl2PluginBase: ../../puppet/services/neutron-plugin-ml2-ovn.yaml
  OS::TripleO::Services::OVNController: ../../docker/services/ovn-controller.yaml
  OS::TripleO::Services::OVNDBs: ../../docker/services/pacemaker/ovn-dbs.yaml
  OS::TripleO::Services::OVNMetadataAgent: ../../docker/services/ovn-metadata.yaml
  OS::TripleO::Services::NeutronOvsAgent: OS::Heat::None
  OS::TripleO::Services::ComputeNeutronOvsAgent: OS::Heat::None
  OS::TripleO::Services::NeutronL3Agent: OS::Heat::None
  OS::TripleO::Services::NeutronMetadataAgent: OS::Heat::None
  OS::TripleO::Services::NeutronDhcpAgent: OS::Heat::None
  OS::TripleO::Services::ComputeNeutronCorePlugin: OS::Heat::None
parameter_defaults:
  NeutronMechanismDrivers: ovn
  OVNVifType: ovs
  OVNNeutronSyncMode: log
  OVNQosDriver: ovn-qos
  OVNTunnelEncapType: geneve
  NeutronEnableDHCPAgent: false
  NeutronTypeDrivers: 'geneve,vlan,flat'
  NeutronNetworkType: 'geneve'
  NeutronServicePlugins: 'qos,ovn-router,trunk'
  NeutronVniRanges: ['1:65536', ]
  NeutronEnableDVR: true
  ControllerParameters:
    OVNCMSOptions: "enable-chassis-as-gw"
(undercloud) [stack@yj23-osp13-deploy ~]$

sahara config

(undercloud) [stack@yj23-osp13-deploy ~]$ grep -iv '^$\|^#\|^[[:space:]]*#' /usr/share/openstack-tripleo-heat-templates/environments/services/sahara.yaml
resource_registry:
  OS::TripleO::Services::SaharaApi: ../../docker/services/sahara-api.yaml
  OS::TripleO::Services::SaharaEngine: ../../docker/services/sahara-engine.yaml
(undercloud) [stack@yj23-osp13-deploy ~]$

-

For testing, each daemon is limited to a single worker so that the deployment uses less memory.

(undercloud) [stack@yj23-osp13-deploy ~]$ grep -iv '^$\|^#\|^[[:space:]]*#' /usr/share/openstack-tripleo-heat-templates/environments/low-memory-usage.yaml
parameter_defaults:
  CinderWorkers: 1
  GlanceWorkers: 1
  HeatWorkers: 1
  KeystoneWorkers: 1
  NeutronWorkers: 1
  NovaWorkers: 1
  SaharaWorkers: 1
  SwiftWorkers: 1
  GnocchiMetricdWorkers: 1
  ApacheMaxRequestWorkers: 100
  ApacheServerLimit: 100
  ControllerExtraConfig:
    'nova::network::neutron::neutron_url_timeout': '60'
  DatabaseSyncTimeout: 900
  CephPoolDefaultSize: 1
  CephPoolDefaultPgNum: 32
(undercloud) [stack@yj23-osp13-deploy ~]$

Settings for using CephFS as the Manila backend.

(undercloud) [stack@yj23-osp13-deploy ~]$ grep -iv '^$\|^#\|^[[:space:]]*#' /usr/share/openstack-tripleo-heat-templates/environments/manila-cephfsnative-config-docker.yaml
resource_registry:
  OS::TripleO::Services::ManilaApi: ../docker/services/manila-api.yaml
  OS::TripleO::Services::ManilaScheduler: ../docker/services/manila-scheduler.yaml
  OS::TripleO::Services::ManilaShare: ../docker/services/pacemaker/manila-share.yaml
  OS::TripleO::Services::ManilaBackendCephFs: ../puppet/services/manila-backend-cephfs.yaml
parameter_defaults:
  ManilaCephFSBackendName: cephfs
  ManilaCephFSDriverHandlesShareServers: false
  ManilaCephFSCephFSAuthId: 'manila'
  ManilaCephFSCephFSEnableSnapshots: false
  ManilaCephFSCephFSProtocolHelperType: 'CEPHFS'

-

Settings for using Ceph as the storage backend.

(undercloud) [stack@yj23-osp13-deploy ~]$ grep -iv '^$\|^#\|^[[:space:]]*#' /home/stack/templates/external-ceph.yaml
parameter_defaults:
  CephAdminKey: 'AQDeXDtb1riOKxAAp5WhgoMFlV4ozWW5yIKo8A=='
  CephClientKey: 'AQC7XjtbAAAAABAABV3Ak9fWPwqSjHq2UiNGDw=='
  CephClientUserName: openstack
  CephClusterFSID: 'e05eb6ce-9c8c-4f64-937f-7b977fd7ba33'
  CephExternalMonHost: '10.23.30.31,10.23.30.32,10.23.30.33'
  CinderEnableIscsiBackend: False
  CinderEnableRbdBackend: True
  CinderRbdPoolName: volumes
  GlanceBackend: rbd
  GlanceRbdPoolName: images
  GnocchiBackend: rbd
  GnocchiRbdPoolName: metrics
  NovaEnableRbdBackend: True
  NovaRbdPoolName: vms
  RbdDefaultFeatures: ''
  ManilaCephFSDataPoolName: cephfs_data
  ManilaCephFSMetadataPoolName: cephfs_metadata
  CephManilaClientKey: 'AQBF9EJbAAAAABAAiUObfszZfU/X11xnfeBG8A=='
resource_registry:
  OS::TripleO::Services::CephClient: OS::Heat::None
  OS::TripleO::Services::CephExternal: /usr/share/openstack-tripleo-heat-templates/puppet/services/ceph-external.yaml
  OS::TripleO::Services::CephMon: OS::Heat::None
  OS::TripleO::Services::CephOSD: OS::Heat::None
(undercloud) [stack@yj23-osp13-deploy ~]$

-

Settings for deploying Barbican.

(undercloud) [stack@yj23-osp13-deploy ~]$ grep -iv '^$\|^#\|^[[:space:]]*#' /usr/share/openstack-tripleo-heat-templates/environments/services/barbican.yaml
resource_registry:
  OS::TripleO::Services::BarbicanApi: ../../docker/services/barbican-api.yaml

-

Settings for using simple-crypto as Barbican's encryption backend. At the moment this is the only backend Red Hat supports.

(undercloud) [stack@yj23-osp13-deploy ~]$ grep -iv '^$\|^#\|^[[:space:]]*#' /usr/share/openstack-tripleo-heat-templates/environments/barbican-backend-simple-crypto.yaml
parameter_defaults:
resource_registry:
  OS::TripleO::Services::BarbicanBackendSimpleCrypto: ../puppet/services/barbican-backend-simple-crypto.yaml

-

Barbican parameter settings

(undercloud) [stack@yj23-osp13-deploy ~]$ grep -iv '^$\|^#\|^[[:space:]]*#' /home/stack/templates/barbican-configure.yml
parameter_defaults:
  BarbicanSimpleCryptoGlobalDefault: true
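
Environment files like the ones listed in these notes are normally handed to `openstack overcloud deploy`, one `-e` per file. The following is only a sketch of how they could be combined. The ordering of the files is an assumption (later files override earlier ones, so the site-specific files under /home/stack/templates are placed last), the helper names `env_files` and `build_deploy_cmd` are made up for illustration, and other options a real deployment would need (roles, node counts, network isolation) do not appear in these notes and are left out. The script prints the command instead of running it.

```shell
#!/bin/sh
# Sketch: print a deploy command built from the environment files in these notes.
THT=/usr/share/openstack-tripleo-heat-templates

# One environment file per line; the order is an assumption.
env_files() {
  cat <<EOF
$THT/environments/services-docker/neutron-ovn-dvr-ha.yaml
$THT/environments/services/sahara.yaml
$THT/environments/low-memory-usage.yaml
$THT/environments/manila-cephfsnative-config-docker.yaml
$THT/environments/services/barbican.yaml
$THT/environments/barbican-backend-simple-crypto.yaml
/home/stack/templates/ips-from-pool-all.yaml
/home/stack/templates/external-ceph.yaml
/home/stack/templates/barbican-configure.yml
EOF
}

# Append "-e <file>" for every environment file and print the result.
build_deploy_cmd() {
  cmd="openstack overcloud deploy --templates"
  for f in $(env_files); do
    cmd="$cmd -e $f"
  done
  printf '%s\n' "$cmd"
}

build_deploy_cmd
```

Printing first makes it easy to review the list before actually running anything against the undercloud.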

-

To use Manila properly after installation … the following additional configuration is required.

-

Inside the manila container on the controller, the /etc/ceph/ directory contains a generated ceph.client.manila.keyring. Import that keyring into the existing Ceph cluster.

[root@yj23-osp13-ceph1 ~]# cat ceph.client.manila.keyring
[client.manila]
    key = AQBF9EJbAAAAABAAiUObfszZfU/X11xnfeBG8A==
    caps mds = "allow *"
    caps mon = "allow r, allow command \"auth del\", allow command \"auth caps\", allow command \"auth get\", allow command \"auth get-or-create\""
    caps osd = "allow rw"
[root@yj23-osp13-ceph1 ~]# ceph auth import ceph.client.manila.keyring
[root@yj23-osp13-ceph1 ~]# ceph auth export client.manila
export auth(auid = 18446744073709551615 key=AQBF9EJbAAAAABAAiUObfszZfU/X11xnfeBG8A== with 3 caps)
[client.manila]
    key = AQBF9EJbAAAAABAAiUObfszZfU/X11xnfeBG8A==
    caps mds = "allow *"
    caps mon = "allow r, allow command "auth del", allow command "auth caps", allow command "auth get", allow command "auth get-or-create""
    caps osd = "allow rw"
[root@yj23-osp13-ceph1 ~]#
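
After the import it can be worth confirming that the key in the keyring file is the one the cluster (and `CephManilaClientKey`) uses. The sketch below compares the `key = ...` line of a keyring file with an expected value. The function names `keyring_key` and `keyring_matches` are hypothetical helpers, and the demo uses a dummy key in a temporary file rather than the real one.

```shell
#!/bin/sh
# Print the key stored in a Ceph keyring file (the "key = ..." line).
keyring_key() {
  awk '$1 == "key" && $2 == "=" { print $3 }' "$1"
}

# Succeed only if the key in keyring file $1 equals the expected key $2.
keyring_matches() {
  [ "$(keyring_key "$1")" = "$2" ]
}

# Demo with a dummy keyring (not a real key).
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
[client.manila]
    key = EXAMPLEKEY==
    caps mds = "allow *"
EOF
keyring_matches "$tmp" "EXAMPLEKEY==" && echo "keyring key matches"
rm -f "$tmp"
```

On the Ceph node the expected value could come from the cluster itself, for example `keyring_matches ceph.client.manila.keyring "$(ceph auth get-key client.manila)"`. Note that `ceph auth import` usually takes the keyring through `-i <file>`.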

Checking RPM dependencies.

yum -q deplist qemu-kvm-rhev.x86_64
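
The `deplist` output is long because every dependency is listed with its provider. A small filter, sketched below, reduces it to the set of packages that provide those dependencies. The function name `deplist_providers` is made up, and the sample input is illustrative text in the `yum deplist` layout, not real output for qemu-kvm-rhev.

```shell
#!/bin/sh
# Read `yum deplist` output on stdin and print each providing package once.
deplist_providers() {
  awk '$1 == "provider:" { print $2 }' | sort -u
}

# Illustrative input (package names and versions are examples only).
deplist_providers <<'EOF'
package: example.x86_64 1.0-1
  dependency: /bin/sh
   provider: bash.x86_64 4.2.46-30.el7
  dependency: libc.so.6()(64bit)
   provider: glibc.x86_64 2.17-196.el7
  dependency: libm.so.6()(64bit)
   provider: glibc.x86_64 2.17-196.el7
EOF
```

With the real command this becomes `yum -q deplist qemu-kvm-rhev.x86_64 | deplist_providers`.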


iptables debugging trace

Checking the VXLAN VTEP on a Linux bridge

How to check the VTEPs when VXLAN is configured with a Linux bridge…

bridge fdb !!!

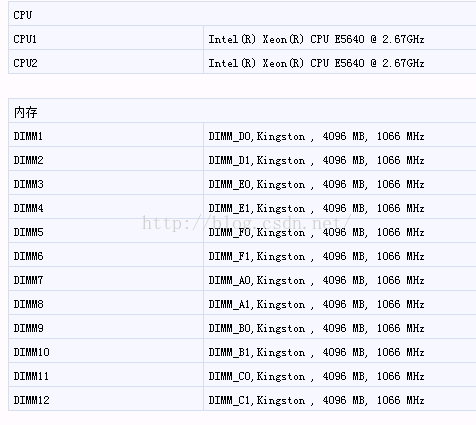
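
In `bridge fdb show` output, the remote VTEP of an entry is the address that follows the `dst` keyword. The sketch below pulls those addresses out so that the list of remote VTEPs is easy to read. The function name `fdb_vteps`, the device name `vxlan0`, and the sample lines are assumptions for illustration, not output from the system in the screenshot.

```shell
#!/bin/sh
# Read `bridge fdb show` output on stdin and print each remote VTEP
# (the address after "dst") once.
fdb_vteps() {
  awk '{ for (i = 1; i < NF; i++) if ($i == "dst") print $(i + 1) }' | sort -u
}

# Illustrative fdb entries for a hypothetical vxlan0 device.
fdb_vteps <<'EOF'
00:00:00:00:00:00 dev vxlan0 dst 10.0.0.2 self permanent
00:00:00:00:00:00 dev vxlan0 dst 10.0.0.3 self permanent
52:54:00:aa:bb:cc dev vxlan0 dst 10.0.0.2 self
52:54:00:dd:ee:ff dev vxlan0 master br0
EOF
```

On a live host the input would come from the command itself, for example `bridge fdb show dev vxlan0 | fdb_vteps`.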

Locating the memory module behind EDAC ECC errors

https://s905060.gitbooks.io/site-reliability-engineer-handbook/how_to_solve_edac_dimm_ce_error.html

$ more /var/log/messages

Jul 21 08:54:32 customer kernel: EDAC MC1: 5486 CE error on CPU#1Channel#2_DIMM#1 (channel:2 slot:1 page:0x0 offset:0x0 grain:8 syndrome:0x0)

Jul 21 08:54:33 customer kernel: EDAC MC1: 11480 CE error on CPU#1Channel#2_DIMM#1 (channel:2 slot:1 page:0x0 offset:0x0 grain:8 syndrome:0x0)

Jul 21 08:54:34 customer kernel: EDAC MC1: 11330 CE error on CPU#1Channel#2_DIMM#1 (channel:2 slot:1 page:0x0 offset:0x0 grain:8 syndrome:0x0)

Jul 21 08:54:35 customer kernel: EDAC MC1: 6584 CE error on CPU#1Channel#2_DIMM#1 (channel:2 slot:1 page:0x0 offset:0x0 grain:8 syndrome:0x0)

Jul 21 08:54:36 customer kernel: EDAC MC1: 27428 CE error on CPU#1Channel#2_DIMM#1 (channel:2 slot:1 page:0x0 offset:0x0 grain:8 syndrome:0x0)

Jul 21 08:54:37 customer kernel: EDAC MC1: 30113 CE error on CPU#1Channel#2_DIMM#1 (channel:2 slot:1 page:0x0 offset:0x0 grain:8 syndrome:0x0)

Jul 21 08:54:38 customer kernel: EDAC MC1: 4453 CE error on CPU#1Channel#2_DIMM#1 (channel:2 slot:1 page:0x0 offset:0x0 grain:8 syndrome:0x0)

Jul 21 08:54:39 customer kernel: EDAC MC1: 6269 CE error on CPU#1Channel#2_DIMM#1 (channel:2 slot:1 page:0x0 offset:0x0 grain:8 syndrome:0x0)

Jul 21 08:54:40 customer kernel: EDAC MC1: 15720 CE error on CPU#1Channel#2_DIMM#1 (channel:2 slot:1 page:0x0 offset:0x0 grain:8 syndrome:0x0)

Jul 21 08:54:41 customer kernel: EDAC MC1: 16107 CE error on CPU#1Channel#2_DIMM#1 (channel:2 slot:1 page:0x0 offset:0x0 grain:8 syndrome:0x0)

Analysis and solution:

These are [EDAC (Error Detection And Correction)](https://www.kernel.org/doc/Documentation/edac.txt) log entries.

CE stands for Correctable Error; the other class is UE (Uncorrectable Error).

Following the document above, identify the DIMM that is reporting errors:

[root@customer log]# grep "[0-9]" /sys/devices/system/edac/mc/mc*/csrow*/ch*_ce_count

/sys/devices/system/edac/mc/mc0/csrow0/ch0_ce_count:0

/sys/devices/system/edac/mc/mc0/csrow0/ch1_ce_count:0

/sys/devices/system/edac/mc/mc0/csrow0/ch2_ce_count:0

/sys/devices/system/edac/mc/mc0/csrow1/ch0_ce_count:0

/sys/devices/system/edac/mc/mc0/csrow1/ch1_ce_count:0

/sys/devices/system/edac/mc/mc0/csrow1/ch2_ce_count:0

/sys/devices/system/edac/mc/mc1/csrow0/ch0_ce_count:0

/sys/devices/system/edac/mc/mc1/csrow0/ch1_ce_count:0

/sys/devices/system/edac/mc/mc1/csrow0/ch2_ce_count:0

/sys/devices/system/edac/mc/mc1/csrow1/ch0_ce_count:0

/sys/devices/system/edac/mc/mc1/csrow1/ch1_ce_count:0

/sys/devices/system/edac/mc/mc1/csrow1/ch2_ce_count:554836518

The errors are all on mc1/csrow1/ch2. The EDAC documentation maps csrows and channels to DIMMs with this chart:

Channel 0 Channel 1

===================================

csrow0 | DIMM_A0 | DIMM_B0 |

csrow1 | DIMM_A0 | DIMM_B0 |

===================================

===================================

csrow2 | DIMM_A1 | DIMM_B1 |

csrow3 | DIMM_A1 | DIMM_B1 |

===================================

From the dmidecode information:

[root@customer log]# dmidecode -t memory | grep 'Locator: DIMM'

Locator: DIMM_D0

Locator: DIMM_D1

Locator: DIMM_E0

Locator: DIMM_E1

Locator: DIMM_F0

Locator: DIMM_F1

Locator: DIMM_A0

Locator: DIMM_A1

Locator: DIMM_B0

Locator: DIMM_B1

Locator: DIMM_C0

Locator: DIMM_C1

Look up the memory information from the server console:

Layout of the memory slots on the motherboard:

Combining this with the error log (kernel: EDAC MC1: 16107 CE error on CPU#1Channel#2_DIMM#1 (channel:2 slot:1 ...)), the DIMM in slot DIMM_F1 is the likely source of the problem.

Solution: replace the DIMM in slot F1. After the replacement and a reboot, the memory errors no longer occurred.
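
When there are many memory controllers, scanning the `grep` output for the one non-zero counter by eye is tedious. The sketch below prints only the per-channel CE counters that are greater than zero. The function name `edac_nonzero_ce` is made up, and it assumes the kernel exposes the `csrow*/ch*_ce_count` files shown above, which may not be present on every kernel.

```shell
#!/bin/sh
# Print "<file>:<count>" for every per-channel CE counter that is not zero.
# $1 is the EDAC sysfs root; it defaults to the usual location.
edac_nonzero_ce() {
  base=${1:-/sys/devices/system/edac/mc}
  for f in "$base"/mc*/csrow*/ch*_ce_count; do
    [ -r "$f" ] || continue          # glob did not match or file unreadable
    n=$(cat "$f")
    [ "$n" -gt 0 ] 2>/dev/null && printf '%s:%s\n' "$f" "$n"
  done
  return 0
}

# Prints nothing when no counter is above zero or the files do not exist.
edac_nonzero_ce
```

Taking the root directory as an argument makes it possible to try the function against a copy of the sysfs tree saved from another host.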

Replacing only the part you want with sed

IPADDR=10.10.10.231

GATEWAY=10.10.10.1

IPADDR=10.10.30.231

IPADDR=10.10.40.231

Change only the last octet of the IP addresses

sed 's/10\.10\.\(.0\)\.231/10.10.\1.232/g' ifcfg-eth*

IPADDR=10.10.10.232

GATEWAY=10.10.10.1

IPADDR=10.10.30.232

IPADDR=10.10.40.232
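
A slightly stricter variant of the same substitution is sketched below. It only touches lines that start with `IPADDR=` and accepts any third octet, so a GATEWAY line that happened to end in .231 would be left alone. The function name `change_last_octet` and the temporary file are just for the demonstration.

```shell
#!/bin/sh
# Change the last octet from 231 to 232, on IPADDR lines only.
# The captured group \1 keeps "IPADDR=10.10.<third octet>" unchanged.
change_last_octet() {
  sed 's/^\(IPADDR=10\.10\.[0-9]\{1,3\}\)\.231$/\1.232/' "$1"
}

# Demo on a temporary file with lines from the example above.
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
IPADDR=10.10.10.231
GATEWAY=10.10.10.1
EOF
change_last_octet "$tmp"
rm -f "$tmp"
```

The function only prints the result, which works as a preview. Once the output looks right, the same expression can be applied in place with `sed -i` (GNU sed).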

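Finding a specific tag (version) of a Docker image

Finding a specific tag (version) of a Docker image is not easy.

`docker search` shows only image names, not tags.

The Docker homepage suggests making curl calls to look the tags up, but for some images the tags do not come back properly (probably because of a regex issue).

So I looked around and found pytools.

It is also easy to use.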

download

git clone https://github.com/HariSekhon/pytools.git

For basic usage, run a script with the --help option.

Example 1 (checking the tags (versions) of an image in a public Docker Hub repository)

root@youngjulee-ThinkPad-S2:/home/youngjulee/src/pytools# ./dockerhub_show_tags.py kolla/centos-binary-kolla-toolbox

DockerHub

repo: kolla/centos-binary-kolla-toolbox

tags: 1.1.1

1.1.2

2.0.1

2.0.2

3.0.1

3.0.2

3.0.3

3.0.3-beta.1

4.0.0

master

Example 2 (checking the tags (versions) of an image in a private repository)

root@youngjulee-ThinkPad-S2:/home/youngjulee/src/pytools# ./docker_registry_show_tags.py -H repo.opensourcelab.co.kr -u <User> -P <Port> -p <Password> -S kolla/centos-binary-kolla-toolbox

Docker Registry: https://repo.opensourcelab.co.kr:xxxx

repo: kolla/centos-binary-kolla-toolbox

tags: 4.0.2

5.0.0

That is how it can be used!
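
For a private registry, the curl route mentioned earlier goes through the Docker Registry HTTP API v2, where `GET /v2/<name>/tags/list` returns a JSON document with a `tags` array. The sketch below extracts the tag names from such a document with plain text tools. The function name `registry_tags` is made up, the sample JSON is illustrative, and the approach assumes tags contain no quotes or commas; a real JSON parser would be more robust.

```shell
#!/bin/sh
# Read a Registry API v2 "tags/list" JSON document on stdin and print
# one tag per line. Prints nothing if there is no "tags" array.
registry_tags() {
  { tr -d '\n'; echo; } |
    sed -n 's/.*"tags"[[:space:]]*:[[:space:]]*\[\([^]]*\)].*/\1/p' |
    tr ',' '\n' |
    sed 's/^[[:space:]]*"//; s/"[[:space:]]*$//'
}

# Illustrative response body.
printf '%s\n' '{"name":"kolla/centos-binary-kolla-toolbox","tags":["4.0.2","5.0.0"]}' | registry_tags
```

Against a live registry the input would come from something like `curl -s -u <User>:<Password> https://repo.opensourcelab.co.kr:<Port>/v2/kolla/centos-binary-kolla-toolbox/tags/list | registry_tags`. Whether basic authentication is enough depends on how the registry is set up.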